High performance computing is getting cheaper every year. But that doesn’t remove the burden of buying these systems on a regular basis when your organization demands ever-increasing computing power to stay competitive. That’s the dilemma a lot of commercial HPC users find themselves in as they wonder how often they should upgrade their HPC machinery. At least one company, Airbus, determined buying HPC systems wasn’t such a great deal after all.

Like all major aircraft manufacturers, Airbus uses high performance computing to support its engineering design work. The company employs it for all its engineering simulation work including wind tunnel aerodynamics, aircraft structure design, composite material design, strength analysis, and acoustic modeling for both the interior of the aircraft and the exterior engine noise. It’s also used in the embedded systems that run the avionics, environmental alert system, and fuel tank and pump calculations. To design these increasingly sophisticated aircraft and go head-to-head against competitors like Boeing requires lots of computational horsepower.

Airbus determined that to keep up they would have to increase their HPC capacity — measured as price for a given number of flops — by a factor of 1.8 every year. The company employs a set of actual engineering codes to benchmark that performance and makes sure that newer HPC systems being considered for deployment fulfill that goal.

The secondary objective was to maximize price-performance. In 2007, after doing the cost analysis, the Airbus bean counters decided it would make more sense for the company to rent HPC rather than acquire the systems outright. Up until then, the aircraft manufacturer had bought its own HPC clusters, installed them in Airbus datacenters, and maintained them for the entire lifetime of those systems.

According to Marc Morere, who heads the Functional Design IT Architecture & Projects group at Airbus, moving to a rent/lease model meant that the money that would have gone into buying equipment could now be applied to buying more HPC capacity. Or as Morere put it: “We prefer to use the costs for our aircraft program, rather than to negotiate with the bank.”

For HPC infrastructure in particular, they determined that it was better for them to pay in increments, rather than up front. Morere says if they finance HPC systems, they can depreciate the hardware, but those depreciation terms always run five years. Unfortunately, that’s two years longer than Airbus would want to actually operate the hardware. With a company goal of a 1.8-fold increase in HPC capacity each year, the recurring costs after three years became too high to justify keeping the older systems running. “The technology moves too quickly,” says Morere.
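To put the three-year versus five-year gap in numbers, here is a rough sketch of the compounding arithmetic. It assumes the 1.8-fold target holds uniformly every year, which is a simplification, and the function name is purely illustrative rather than anything Airbus uses.

```python
# Illustrative only: compounds the article's 1.8x annual capacity target.
ANNUAL_GROWTH = 1.8  # yearly capacity growth factor quoted in the article


def relative_capacity(years: int, growth: float = ANNUAL_GROWTH) -> float:
    """Capacity per unit cost of a new system relative to one bought `years` ago."""
    return growth ** years


# Compare the three-year operating window with the five-year depreciation term.
for years in (3, 5):
    print(f"after {years} years: {relative_capacity(years):.1f}x")
```

Under that assumption, a system bought today would be outpaced nearly sixfold by the end of year three and nearly nineteenfold by the end of a five-year depreciation term, which is the gap Morere is describing.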

In 2007, they first looked into a pure HPC on-demand model, where they would just buy compute cycles. But according to Morere, they couldn’t find a satisfactory solution with HP or any other vendor they talked with. The idea then morphed into a service model where HPC systems would be deployed outside of the Airbus datacenters and leased back to the company.

The only real downside, when compared to the on-demand model, is that a service entails a flat fee, where you pay the same amount regardless of the available compute capacity consumed. On the flip side, it’s easier for the accountants to budget in a fixed monthly cost than one that could vary through time — based not just on changing computational needs, but also on the volatility of electricity costs and the more variable costs of labor.

In 2007 and 2008, they contracted IBM to host Airbus HPC systems off-site in IBM’s own datacenter. Airbus tapped into the systems remotely for their engineering simulations, but because of the distance between the Airbus research sites and the datacenter, network performance sometimes limited what could be accomplished.

Then in 2009, Airbus inked a deal with HP to install containerized Performance Optimized Datacenters (PODs) on-site, but with HP running the infrastructure as a service. Although the PODs were on Airbus property, they didn’t require a datacenter habitat, so the containerized clusters could be set up virtually anywhere there was electricity and water. The HP service contract included all the hardware, system setup, maintenance, operation of software, cooling, UPS, and generators. HP even pays the electric bill. All of this is wrapped up in a monthly service fee they charge to Airbus.

Other bidders on the 2009 contract included IBM, SGI, Bull, and T-Systems. Morere says in the end it came down to IBM and HP, with the others being too expensive for the type of all-inclusive service Airbus was interested in. According to Morere, HP was chosen because it had the best technical solution and the best price-performance.

The first phase of the HP contract resulted in the deployment of a POD in Toulouse, France, in 2009. Another POD was added in Hamburg, Germany, in 2010. The original Toulouse POD, based on Intel Nehalem CPUs, was retired in August 2011.

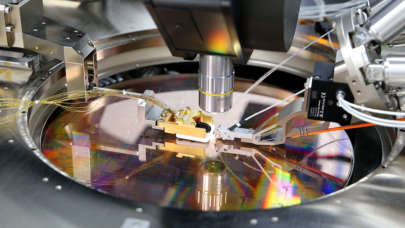

The Toulouse POD was replaced with two Intel Westmere-based PODs with the latest InfiniBand technology. That system, which currently sits at number 29 on the TOP500 list, went into production in July 2011. It consists of 2,016 HP ProLiant BL280 G6 blade servers, and delivers about 300 teraflops of peak performance. Although all those servers fit into two containers, each 12 meters long, they deliver the equivalent of 1,000 square meters of datacenter HPC.

Because the PODs in Toulouse are on Airbus premises, about 50 meters from the company’s main computer facility, they were able to link the HPC cluster to the machines in the datacenter with four 10GbE links. That kind of direct hookup delivered very low latency as well as plenty of bandwidth.

At this point one might ask why Airbus even operates its own datacenters anymore. Currently the facilities are being used for application servers, storage, and database work. Some of these in-house systems include HP blades, but at this point, not PODs. All the pre-processing and post-processing for the HPC work is performed by these datacenter systems. But since these types of applications are not so performance bound, the servers there can operate for five years or longer, and thus take advantage of a standard depreciation cycle.

Whether HPC-as-a-service becomes more widespread remains to be seen. Not every customer feels the need to increase HPC capacity at the rate Airbus does, nor does every company buy enough HPC equipment to make a service contract a viable option. But Airbus, at least, seems to have found the financial model and the type of system that make sense for it.