On December 5th, the research team at the National Ignition Facility (NIF) at Lawrence Livermore National Laboratory (LLNL) achieved a historic win in energy science: for the first time ever, a controlled fusion reaction produced more energy than the laser energy used to drive it, releasing 3.15 megajoules (MJ) from 2.05 MJ of laser energy delivered to the target. High-performance computing was key to this breakthrough (called “ignition”), and HPCwire recently had the chance to speak with Brian Spears, deputy lead modeler for inertial confinement fusion (ICF) at the NIF, about HPC’s role in making fusion ignition a reality.
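
The headline figure is usually expressed as target gain: the ratio of fusion yield to the laser energy delivered to the target. A minimal sketch of that arithmetic, using only the two numbers quoted above (the function name `target_gain` is our own, not NIF terminology):

```python
def target_gain(fusion_yield_mj: float, laser_energy_mj: float) -> float:
    """Ratio of fusion energy released to laser energy delivered to the target."""
    if laser_energy_mj <= 0:
        raise ValueError("laser energy must be positive")
    return fusion_yield_mj / laser_energy_mj


# December 5 shot, as reported: 3.15 MJ out from 2.05 MJ of laser energy in.
gain = target_gain(3.15, 2.05)
print(f"target gain = {gain:.2f}")  # prints: target gain = 1.54
```

A gain above 1.0 is the milestone described here. It counts only laser energy on target; the electricity needed to power the laser itself is much larger and is not part of this ratio.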

The Basics

Fusion energy has long been the Holy Grail of energy science, promising enormous amounts of zero-carbon, low-radioactivity nuclear energy – but, sadly, the precise and extreme conditions required to generate fusion reactions have, until now, kept any experiment from releasing more fusion energy than was put in to drive it.

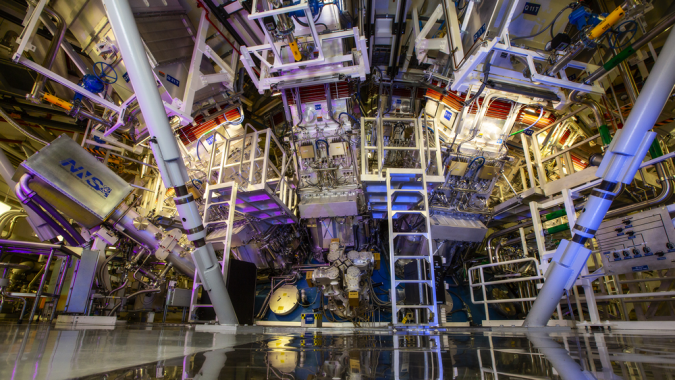

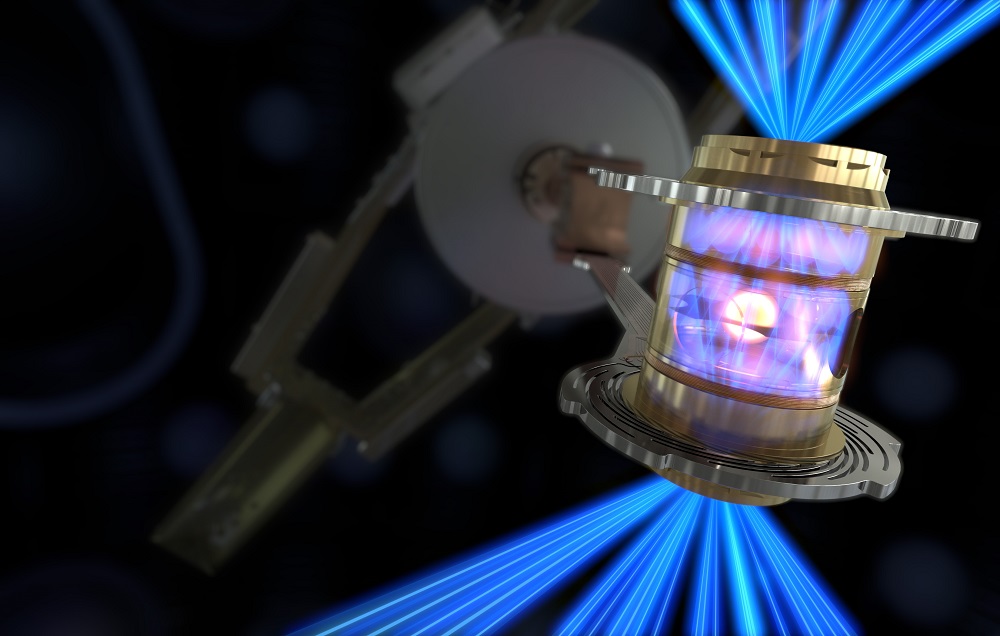

At the NIF – which supports the National Nuclear Security Administration’s Stockpile Stewardship Program – researchers are working on the laser indirect drive approach to inertial confinement fusion. Spears explained the basics: first, laser beams are fired into a small gold can called a hohlraum (German for “hollow space”). In the hohlraum, the lasers create a bath of x-rays, which cooks a target capsule made of ultrananocrystalline diamond. Inside that capsule: frozen deuterium and tritium. When the x-rays heat the capsule enough, its outer layer explodes outward, driving the nuclear fuel inward and compressing it to extremely high temperatures and densities; this causes fusion to occur and produces substantial energy. The target chamber for the NIF is pictured in the header.

The processes involved in performing these tests are mind-boggling. “We have something called cross-beam energy transfer that is literally what happens in Ghostbusters,” Spears said, referring to the film’s warning about the dangers of crossing the streams of the heroes’ proton packs. “We have two cones of lasers, some that come in closer to the top of the capsule and some further away, and when those two beams cross, laser power is actually transferred from one set of beams to the other, and we use that to control the asymmetry of the implosion so we don’t push too hard on one side.”
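
The symmetry-control idea can be illustrated with deliberately crude power bookkeeping. This is an assumption made for illustration only, not a physics model of cross-beam energy transfer (the real transfer depends on plasma conditions and on the wavelength difference between the beam cones). In the sketch, a fraction `f` of the outer-cone power moves to the inner cone, and we solve for the `f` that gives a desired inner-to-outer power ratio. All names and numbers are hypothetical.

```python
def transfer_fraction(p_inner: float, p_outer: float, target_ratio: float) -> float:
    """Fraction f of outer-cone power to move to the inner cone so that
    (p_inner + f * p_outer) / ((1 - f) * p_outer) == target_ratio.

    Toy bookkeeping only: ignores all plasma physics and assumes the
    transferred power is conserved.
    """
    if p_outer <= 0 or p_inner < 0 or target_ratio < 0:
        raise ValueError("need positive outer power and non-negative inputs")
    f = (target_ratio * p_outer - p_inner) / ((1.0 + target_ratio) * p_outer)
    # A negative f would mean moving power the other way (inner to outer),
    # which this one-directional toy does not model, so clamp to [0, 1].
    return min(max(f, 0.0), 1.0)


def powers_after_transfer(p_inner: float, p_outer: float, f: float):
    """Inner- and outer-cone powers after moving fraction f of the outer power."""
    return p_inner + f * p_outer, (1.0 - f) * p_outer


# Hypothetical cone powers (arbitrary units): the outer cone starts with twice the power.
f = transfer_fraction(p_inner=1.0, p_outer=2.0, target_ratio=1.0)
print(f, powers_after_transfer(1.0, 2.0, f))  # 0.25 (1.5, 1.5)
```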

The target is also the subject of exacting precision. “The outside of that capsule – the ablator – that explodes is polished to something like a hundred times smoother than a mirror, but it still has more defects than we’d like,” Spears said, explaining that these defects allow carbon to mix with the nuclear fuel, cooling the reaction. “This shot actually had a headwind of a capsule which was a little bit defective in our eyes. It’s still one of the most highly polished pieces of object that humans have ever made on the planet, but it wasn’t the best one we could make.”

The Methods

One of the issues that has hindered the development of fusion energy has been the difficulty and expense (and, as a result, the infrequency) of running each individual experiment. “Our laser can fire a couple of times a day,” Spears said. “These very large, demonstration-class experiments can happen once a week to once a month, so they’re pretty sparse in time.” At the NIF, Spears and his team apply HPC and AI to ensure that every time they fire the laser, they’re learning as much as they can from the results and using what they learn to refine subsequent experiments.

Spears said there were essentially two pieces to their predictive capability. The first is their fundamental design capability: using hundreds of thousands of lines of radiation hydrodynamics code to run massive simulations of fusion reactions on leadership-class supercomputers. The second is cognitive simulation, or CogSim.

“We recognize that simulated prediction can sometimes be overly optimistic in the fusion world – which is why you’ve heard the line, maybe, that fusion is 30 years away and always will be,” Spears said – and this, he continued, is where CogSim helps out.

“What we call cognitive simulation is really the merging of simulation predictions with experimental reality that we’ve actually observed using AI,” he said. “What CogSim does is give us an assessment tool. Our standard, traditional HPC says, ‘Look, this is the way this implosion is going to work. If you do these things, it looks really nice.’ And then CogSim comes in and says, ‘Well, okay, but in the real world there are all these degradations that your design is going to have to contend with, and this is what it looks like when you actually field something at NIF, give me that new design and I’ll tell you whether it’s robust or not.’” (This isn’t the first time CogSim has hit our radar: ahead of the successful run, HPCwire awarded its 2022 Editors’ Choice Award for Best Use of HPC in Energy to LLNL and the NIF for their use of CogSim in fusion energy modeling.)
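
The interview does not detail how CogSim works internally, so the following is only a generic sketch of the idea Spears describes: learn how far past experiments fell short of their simulated predictions, then use that spread to ask how likely a new design is to clear a threshold. The source says the merging is done “using AI”; this sketch swaps that for a simple two-parameter statistical fit, assuming (purely for illustration) that each shot’s observed-to-simulated yield ratio is log-normally distributed. The shot history is made-up placeholder data, not NIF results.

```python
import math
from statistics import NormalDist, mean, stdev


def fit_degradation(simulated, observed):
    """Fit mean and spread of log(observed / simulated) over past shots.

    Assumption for illustration: real-world degradations act as a
    multiplicative, log-normally distributed factor on the simulated yield.
    """
    if len(simulated) != len(observed) or len(simulated) < 2:
        raise ValueError("need at least two paired shots")
    log_ratios = [math.log(o / s) for s, o in zip(simulated, observed)]
    return mean(log_ratios), stdev(log_ratios)


def probability_exceeds(simulated_yield, threshold, mu, sigma):
    """P(simulated_yield * degradation > threshold) under the log-normal model."""
    # The yield clears the threshold when log(degradation) > log(threshold / simulated_yield).
    cutoff = math.log(threshold / simulated_yield)
    return 1.0 - NormalDist(mu, sigma).cdf(cutoff)


# Made-up placeholder history (MJ) in which simulations over-predict.
sim_history = [2.0, 3.0, 1.5, 4.0]
obs_history = [0.8, 1.4, 0.5, 1.9]
mu, sigma = fit_degradation(sim_history, obs_history)

# Chance that a hypothetical new design, simulated at 5 MJ, beats 2.05 MJ of laser energy.
p = probability_exceeds(simulated_yield=5.0, threshold=2.05, mu=mu, sigma=sigma)
print(f"P(yield > laser energy) ~ {p:.2f}")
```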

“We use almost all of our [HPC] resources across this process,” he said. The traditional design happens mostly on CPU-based systems – LLNL’s Intel-based Jade system, he added, is a workhorse for that segment of the research.

“When we transition into the cognitive simulation world, we use two supercomputers,” he continued. “One for capacity is the Trinity system at Los Alamos, so we use that quite heavily to do hundreds of thousands of rad-hydro predictions. When we actually do the machine learning piece, we sometimes put that on Sierra and use our largest system to do that. But we are pretty much on every machine that’s in our secure computing facility, both for capacity reasons and for the capabilities that the different machines bring.”

LLNL also has a Cerebras CS-2 system hooked up to its Lassen supercomputer, but Spears said that, while the CS-2 was used for research and development work and will be important for the next generation of research, it was not used principally in this work.

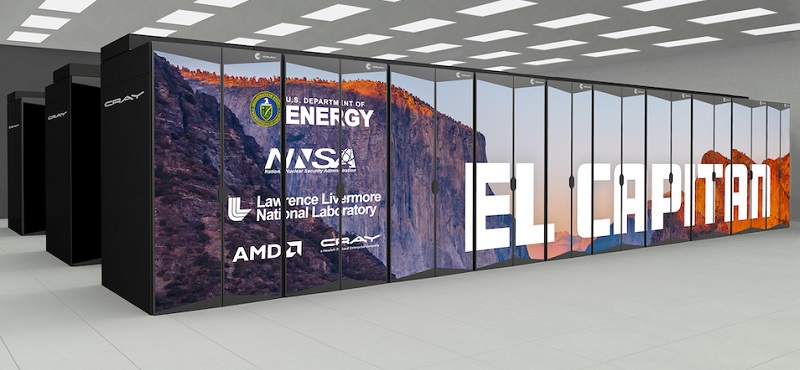

“We will use that kind of thinking developed [with the CS-2] for what we call Ice Cap, which is a project that we have built for when we take delivery of [the exascale supercomputer] El Capitan … in the next year,” Spears said. “So some of the techniques that are developed there are helping us understand how to move back and forth between high-precision workloads and low-precision machine learning workloads.”
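
The gap between high-precision simulation and low-precision machine learning is easy to see with Python’s standard `struct` module, which can round a value through IEEE 754 single precision (format `'f'`) and half precision (format `'e'`). This illustrates the number formats only; it is not anything from Ice Cap.

```python
import struct


def round_to(fmt: str, x: float) -> float:
    """Round a Python float (IEEE 754 double) through a narrower format and back."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


x = 1.0001  # a perturbation of one part in ten thousand
print("double:", x)
print("single:", round_to("f", x))  # keeps the perturbation, approximately
print("half:  ", round_to("e", x))  # spacing near 1.0 is 2**-10, so this rounds to 1.0
```

Rounding of this size is often tolerable in neural-network training but can wipe out the small differences a radiation-hydrodynamics calculation needs to track, which is why a workflow spanning both has to move data between precisions deliberately.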

The Shot

On September 19th, the team began putting more energy through the laser – something the computational work had indicated would help the experiment surmount the “ignition cliff” and reach the fabled “ignition plateau.” With that extra energy (the same 2.05 MJ that would later be used for the December shot), the September shot produced over 1 MJ of yield. However, it was hindered by an implosion that was “a little bit pancake-shaped,” so Spears and his colleagues asked the code: what would happen if they made it round by changing the way the power was distributed among the laser beams?
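
“Pancake-shaped” can be made quantitative. ICF researchers commonly describe implosion shape by expanding the radius, as a function of polar angle, in Legendre polynomials; the P2 mode captures the difference between the poles and the equator. The sketch below is a generic illustration (the shape and its 10% distortion are hypothetical) that projects a shape onto P0 and P2 with a simple midpoint-rule integral. With the polar angle measured from the symmetry axis, a shape flattened at the poles gives a negative P2/P0, and “making it round” means driving that ratio toward zero.

```python
def legendre(l: int, x: float) -> float:
    """Legendre polynomial P_l(x) via the standard three-term recurrence."""
    p_prev, p = 1.0, x
    if l == 0:
        return p_prev
    for n in range(1, l):
        p_prev, p = p, ((2 * n + 1) * x * p - n * p_prev) / (n + 1)
    return p


def legendre_coefficient(radius, l: int, samples: int = 2000) -> float:
    """a_l = (2l+1)/2 * integral over [-1, 1] of radius(x) * P_l(x) dx, x = cos(theta).

    A midpoint rule is accurate enough for smooth shapes like these.
    """
    h = 2.0 / samples
    total = 0.0
    for i in range(samples):
        x = -1.0 + (i + 0.5) * h
        total += radius(x) * legendre(l, x) * h
    return (2 * l + 1) / 2 * total


def p2_over_p0(radius) -> float:
    """Normalized P2 asymmetry of a shape given as radius(cos(theta))."""
    return legendre_coefficient(radius, 2) / legendre_coefficient(radius, 0)


def pancake(x: float) -> float:
    # Hypothetical shape: radius 0.9 at the poles (x = +-1), 1.05 at the equator (x = 0).
    return 1.0 - 0.1 * legendre(2, x)


def sphere(x: float) -> float:
    return 1.0


print(f"pancake P2/P0 = {p2_over_p0(pancake):.3f}")  # about -0.100
```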

“The code said, ‘If you do that, you’re going to get two to five times more energy out than you did this time,’” Spears said. “And then we went through that CogSim loop of saying, ‘In the face of all the degradations, is that really true?’ And it looked like the answer was yes, and so we went pedal to the metal and did this.”

“We had gone through for a couple months with these traditional design tools and the answer looked pretty good. But I’ve been doing this for 18 years, and the answer has looked pretty good many times. With the CogSim tools, this was the first time that – by around Thanksgiving – we were combining the experiment with the simulation and we were getting things that looked qualitatively different from anything that we had seen before. And by the week before the shot, we had a very solid assessment that said more likely than not, this is going to exceed the amount of energy that goes into the target by laser. And that’s the first time we’d ever seen that number. Sometimes, in the previous shots before that, that number may have been 15% likely that that could happen … sometimes it was way, way, less than 15%. This time, it was over 50%.”

“We essentially, from the prediction side, called that shot, and said: hey, look, based on HPC, combined with experiments and a little AI magic thrown in, this looks really good,” he said. “I hit send on that email to our senior management team with a little bit of trepidation, because after 18 years of that not happening, statistically speaking, it’s not all that likely to happen.”

But it did.

What’s Next for the NIF

“There are insights that have come out of this,” Spears said. “We now understand very clearly the role that asymmetries play in our implosions. One of the principal things that we did was to use that ‘Ghostbuster effect’ – the cross-beam energy transfer – to make these implosions more spherical.” Spears added there would be four or five seminal papers emerging from the event, but cautioned that many of the other takeaways would be opaque to people who weren’t “deep, deep insiders.”

Of course, the path to scalable fusion – and even to radically improved experiments based on the successful shot – remains a long one. “The timescale is not short yet,” Spears said. “When we do an experiment, it takes us a few days in order to actually fully reduce the diagnostics and analyze them and understand what we saw. … Design ideas for that are probably in play within days to a week after we’ve seen the experiment. Then we go into a real production loop – but that loop is quite long, because to make our targets takes months and months and months, so if you look at an experiment and say, ‘Hey, look, I think we oughta thicken up the ablator just a little bit more’ – to build those capsules takes three months, and if you stick on the front of that a careful specification of that target of about a month, it’s hard for us to react much more quickly than six months.”

And, Spears said, don’t count on the successful shot being effortlessly reproduced. “We should point out, for the future – a little greater than 50% odds is still only a little better than a flip of a coin, right? This is not going to happen every time we pull the trigger on the laser, there are going to be things that happen and we’re going to learn from them just as we have for the last 18 years.”
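
Spears’s coin-flip comparison can be made concrete with elementary probability. The sketch treats each large shot as an independent trial with a fixed success probability, which is a simplifying assumption (real shots differ in targets and settings), and the exact odds beyond “a little greater than 50%” are not given, so p = 0.55 is a hypothetical stand-in.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(at least k successes in n independent shots, each succeeding with probability p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


p = 0.55  # hypothetical per-shot odds, "a little greater than 50%"
print(f"next shot succeeds:        {prob_at_least(1, 1, p):.3f}")  # 0.550
print(f"all of next three succeed: {prob_at_least(3, 3, p):.3f}")  # 0.166
print(f"at least one of three:     {prob_at_least(1, 3, p):.3f}")  # 0.909
```

Under these assumptions, three successes in a row would happen only about one time in six, even though at least one success in three attempts is likely.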

Still, there are plenty of reasons for optimism in the wake of the successful shot.

“The next batch of shots will use targets that we’re pretty satisfied with so far, so we’re actually secretly even a little more optimistic for things in the future,” Spears said. “But it’s fusion, and stuff happens, so you never really know until you see the diagnostics the next morning.”

The researchers also expect the energy gains to scale dramatically with energy input. “We expect it to be strongly nonlinear, and it will only get better as we build designs that accommodate the increase in energy,” Spears said. “For some perspective, between the last event and this one, we put in 8% more energy in the laser and we got 230% more energy out in fusion.”
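
One crude way to quantify “strongly nonlinear” is to ask what exponent n in a power law, yield ∝ (laser energy)^n, would connect the two shots Spears compares. Two data points cannot establish a power law, and “230% more” can be read as a factor of 3.3 or, more loosely, 2.3, so this sketch (the function name is our own) computes both readings.

```python
import math


def implied_exponent(input_ratio: float, output_ratio: float) -> float:
    """Exponent n such that output_ratio == input_ratio ** n (a two-point power-law fit)."""
    if input_ratio <= 0 or input_ratio == 1 or output_ratio <= 0:
        raise ValueError("ratios must be positive and input_ratio must differ from 1")
    return math.log(output_ratio) / math.log(input_ratio)


laser_ratio = 1.08  # "8% more energy in the laser"
for label, yield_ratio in [("230% more (x3.3)", 3.3), ("x2.3 reading", 2.3)]:
    n = implied_exponent(laser_ratio, yield_ratio)
    print(f"{label}: yield ~ E_laser^{n:.1f}")
```

Either reading implies an exponent above 10, compared with 1 for a linear response.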

The laser isn’t yet ready to be fired at that high intensity again, but Spears said that the next attempts to conduct large shots will likely be in January and February, then throughout the spring and summer. And, by next October, he expects the shots will be based largely on the lessons learned from the successful shot fired earlier this month.

“You’ll hear some splash again about ICF when we receive [El Capitan] and we essentially use more cycles than have ever been used in ICF to polish or refine one of these designs in a way that only El Cap can do it,” Spears added.

Spears also shared that the lab has been receiving an “overwhelming” amount of support in the wake of its win. “It’s hard not to be excited about getting up in the morning to go to work and do this,” he said.

The Future of Fusion Energy Research

Spears doesn’t think that the current workflow is how things will always be done, citing a self-driving laser project LLNL is working on with Colorado State University.

“There are laser systems that we’ve developed – both at Livermore and elsewhere – that can fire multiple times per second,” he said. In a self-driving research loop, an experiment would be run and observed; a deep neural network surrogate would then compare the experimental results with its predictions and choose the next experiment on the fly, every few hundred milliseconds, with no designer in the loop and an entire array of targets already available.
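
The closed loop described above (run, observe, compare with the model, pick the next experiment from targets already on hand) can be sketched in a few dozen lines. This sketch swaps the deep neural network surrogate for a much simpler inverse-distance-weighted average plus an exploration bonus, and uses a made-up one-parameter “experiment”; the function names, exploration weight, and candidate grid are all hypothetical and do not describe the LLNL/Colorado State University design.

```python
from typing import Callable, Dict, List, Optional


def surrogate_score(x: float, observed: Dict[float, float], explore: float) -> float:
    """Cheap stand-in for a trained surrogate: inverse-distance-weighted mean
    of past results, plus a bonus for being far from anything already tried."""
    distances = {xo: abs(x - xo) for xo in observed}
    weights = {xo: 1.0 / d**2 for xo, d in distances.items()}
    total = sum(weights.values())
    predicted = sum(w * observed[xo] for xo, w in weights.items()) / total
    return predicted + explore * min(distances.values())


def choose_next(candidates: List[float], observed: Dict[float, float],
                explore: float) -> Optional[float]:
    """Pick the untried candidate target with the highest surrogate score."""
    untried = [c for c in candidates if c not in observed]
    if not untried:
        return None
    return max(untried, key=lambda c: surrogate_score(c, observed, explore))


def self_driving_loop(experiment: Callable[[float], float], candidates: List[float],
                      shots: int, explore: float = 1.0) -> Dict[float, float]:
    """Run up to `shots` experiments with no human in the loop; return setting -> result."""
    observed = {candidates[0]: experiment(candidates[0])}  # seed with one shot
    for _ in range(shots - 1):
        nxt = choose_next(candidates, observed, explore)
        if nxt is None:  # every available target has been used
            break
        observed[nxt] = experiment(nxt)  # "fire", observe, and update the data
    return observed


def toy_experiment(x: float) -> float:
    # Hypothetical response that peaks at a setting of 0.6.
    return -(x - 0.6) ** 2


targets = [i / 20 for i in range(21)]  # an array of targets already on hand
results = self_driving_loop(toy_experiment, targets, shots=8)
best = max(results, key=results.get)
print(f"best setting after {len(results)} shots: {best:.2f}")
```

The exploration bonus keeps the loop from repeatedly probing near its first good result; a real surrogate would typically also report its own uncertainty for that purpose.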

“This discovery loop that we’ve been talking about now becomes tightly closed,” Spears said. “These self-driving knowledge discovery loops – that’s the future. That’s the direction that we’re going. … This is making HPC a real-time commodity for experiments, and entirely blurring or erasing the boundary between HPC and experiment.”

“I expect that we’re going to see a petaflop-scale high-performance computer sitting in all of the experimental facilities, helping us run operations like this – but still calling home to enormous, El Capitan-sized computers for very high-precision stuff.”

Beyond Fusion Energy

The team’s methods are also useful for fields outside of fusion energy. For instance, Spears said, because the code they use is predictive enough for ICF, it is also good at modeling extreme ultraviolet (EUV) lithography. “So it’s been a transformational capability in making semiconductors – which is kind of useful for doing HPC!” he said. “So in a nice virtuous cycle, we’ve made our computational capabilities so good that we can work with industry partners to predict better ways to make the semiconductors that we need to make better computers. It’s far away from ICF, but because it’s really extreme plasma conditions to do those things, we know how to do it and it has a nice benefit for us – and for your audience, too.”

CogSim is also finding uses in the life sciences, where researchers at LLNL applied it to Covid research last winter. “Our team used some of these CogSim [tools] to, over a Christmas sprint in two weeks, completely redesign an antibody for Covid-19,” Spears said. “By January of this year, just three weeks after that Christmas, those molecules were in trials with AstraZeneca.”