The pioneering “man-versus-machine” match on Jeopardy last night has a lot of people thinking, and writing, about artificial intelligence. Developing a machine that can understand the nuances of human language is one of the biggest computing challenges of our day. Natural language processing is a domain where most five-year-olds can outperform even the most powerful supercomputers. To illustrate, researchers often cite the “Paris Hilton” problem: it is difficult for a machine to know whether the phrase refers to the celebrity or the hotel. IBM built its Watson supercomputer to handle complex language processing tasks like this one and to grasp the finer points of human language, such as subtlety and irony.

Last night was Watson’s official debut, on the first night of a two-game match. The evening ended in a tie, with Watson proving itself a worthy opponent, well matched against human champions Ken Jennings and Brad Rutter. Faced with a “Paris Hilton” type problem, Watson uses additional clues to distinguish the two meanings; the phrase “Paris Hilton reservation,” for example, points toward the hotel. Drawing on a vast knowledge databank, the supercomputer relies on its ability to link related concepts to come up with several best guesses. It then uses a ranking algorithm to select the answer with the highest probability of being correct.
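To make that final ranking step a little more concrete, here is a toy sketch in Python. It is not IBM’s method; it only illustrates the general idea of scoring each candidate answer on several pieces of evidence, combining those scores into a confidence with a logistic function, and picking the top candidate. The `Candidate` type, the feature names (`context_match`, `source_popularity`, `type_match`), the weights and the bias are all hypothetical values chosen by hand for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    answer: str
    features: dict[str, float]  # hypothetical evidence scores in [0, 1]


# Hypothetical hand-picked weights; a real system would learn these from past questions.
WEIGHTS = {"context_match": 3.0, "source_popularity": 1.0, "type_match": 2.0}
BIAS = -3.0


def confidence(c: Candidate) -> float:
    """Combine the evidence scores into a value in (0, 1) with a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in c.features.items())
    return 1.0 / (1.0 + math.exp(-z))


def rank(candidates: list[Candidate]) -> list[tuple[str, float]]:
    """Return (answer, confidence) pairs, most confident first."""
    scored = [(c.answer, confidence(c)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# "Paris Hilton reservation": the word "reservation" raises the hotel's context score.
candidates = [
    Candidate("Paris Hilton (celebrity)",
              {"context_match": 0.2, "source_popularity": 0.9, "type_match": 0.5}),
    Candidate("Hilton Paris (hotel)",
              {"context_match": 0.9, "source_popularity": 0.4, "type_match": 0.8}),
]
ranked = rank(candidates)
best, p = ranked[0]
print(f"Best guess: {best} (confidence {p:.2f})")
```

Because the context clue carries the largest weight in this sketch, the hotel reading outranks the celebrity even though the celebrity scores higher on popularity.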

Watson’s performance on Jeopardy marks a big leap for the field of machine learning. The next big advance will come when Watson, or another, possibly similar, machine passes the Turing test. A machine passes when, in the course of a conversation, we can no longer tell whether we are talking to a human or a computer. Some maintain that a machine cannot truly be considered intelligent until it has passed this litmus test, while others criticize the test for using too narrow a definition of intelligence.

Although the Jeopardy match could be considered mere entertainment, or clever marketing, depending on how you look at it, IBM plans to develop commercial applications of the technology in fields as diverse as healthcare, education, tech support, business intelligence, finance and security.

Turing test aside, Watson’s abilities point to considerable progress on the AI front, with real-world implications. If you think of artificial intelligence as a continuum, say a ladder, rather than a single discrete step, then one of the lower and more attainable rungs could be labeled “intelligence augmentation,” with the highest rungs reserved for a true thinking machine, a la the Singularity theory most commonly associated with futurist Ray Kurzweil.

Both concepts have received attention recently, with The New York Times’ John Markoff citing major developments in intelligence augmentation and a Time Magazine article providing a thorough treatment of the Singularity model.

According to Markoff, “Rapid progress in natural language processing is beginning to lead to a new wave of automation that promises to transform areas of the economy that have until now been untouched by technological change.”

Markoff cites personal computing as a major component in the rise of intelligence augmentation, in that it has provided humans with the tools for gathering, producing and sharing information and has pretty much single-handedly created a generation of knowledge workers.

The editor of the Yahoo home page, Katherine Ho, uses computers to reorder articles for maximum readership. With the help of specialized software, she can fine-tune the lineup based on readers’ tastes and interests. Markoff describes Ms. Ho as a “21st-century version of a traditional newspaper wire editor” who, instead of relying solely on instinct, bases her decisions on computer-vetted information.
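Markoff does not describe how Yahoo’s software actually works, so the following is a purely hypothetical sketch of one common way a system could order headlines by reader interest: a click-through rate smoothed toward a prior, so that a story shown only a handful of times cannot jump to the top on one lucky click. The `Article` type, its fields, the headlines and the prior values are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Article:
    headline: str
    clicks: int       # hypothetical: times readers clicked the headline
    impressions: int  # hypothetical: times the headline was shown


def smoothed_ctr(a: Article, prior_clicks: float = 1.0, prior_views: float = 20.0) -> float:
    """Click-through rate pulled toward a prior of 1 click per 20 views, so a
    headline shown only a few times cannot top the page on one lucky click."""
    return (a.clicks + prior_clicks) / (a.impressions + prior_views)


def reorder(articles: list[Article]) -> list[Article]:
    """Sort articles by estimated reader interest, highest first."""
    return sorted(articles, key=smoothed_ctr, reverse=True)


front_page = reorder([
    Article("Storm hits coast", clicks=60, impressions=500),
    Article("New phone review", clicks=1, impressions=5),
    Article("Council budget vote", clicks=10, impressions=400),
])
for article in front_page:
    print(f"{article.headline}: {smoothed_ctr(article):.3f}")
```

The phone review has the best raw rate (1 click in 5 views), but with so little data the prior pulls its estimate down to 0.08, below the storm story’s roughly 0.117.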

Google takes computational support one step further: its search engine relies solely on algorithms to rank search results. As Markoff notes, computational software can be used to extend the skills of a human worker, but it can also be used to replace the worker entirely.

Markoff concludes that “the real value of Watson may ultimately be in forcing society to consider where the line between human and machine should be drawn.”

The Singularity, the subject of the Time Magazine article, also deals with the dividing line between human and machine, but takes the idea quite a bit further. In his 2001 essay “The Law of Accelerating Returns,” Kurzweil defines the Singularity as “a technological change so rapid and profound it represents a rupture in the fabric of human history.” It can also be described as the point in the future when technological advances happen so rapidly that ordinary humans cannot keep pace and are “cut out of the loop.” Kurzweil predicts this transformative event will occur by the year 2045, only about 35 years away.

We don’t know what such a radical change would entail because, by the very nature of the prediction, we’re not as smart as the future machines will be. But the article outlines some of the many theories. Humankind could meld with machines to become super-intelligent cyborgs. We could use advances in the life sciences to delay the effects of old age and possibly cheat death indefinitely. We might be able to download our consciousness into software. Or perhaps the future computational network will turn on humanity, a la Skynet. As Lev Grossman, author of the Time Magazine article, writes, “the one thing all these theories have in common is the transformation of our species into something that is no longer recognizable” from our current vantage point.

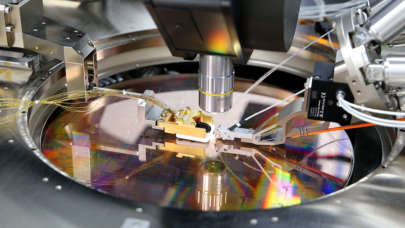

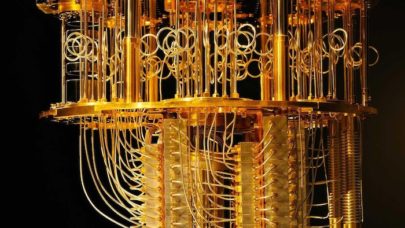

How could such an earth-shattering event possibly occur by 2045, you wonder? The simple answer is that computers are getting much faster; more pertinently, the rate at which they are getting faster is itself increasing. Supercomputers, for example, are growing roughly a thousand times more powerful every decade. Doing the math, that compounds to a staggering billion-fold increase in only 30 years (1,000 × 1,000 × 1,000). This is the law of accelerating returns in action, and according to its supporters, this exponential technology explosion is what will propel the Singularity.
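At a rate of roughly a thousandfold per decade, the gains multiply rather than add, so three decades and four decades work out as follows; a trillion-fold gain at this rate takes about 40 years:

$$
\underbrace{10^{3} \times 10^{3} \times 10^{3}}_{30\ \text{years}} = 10^{9}\ \text{(a billion)},
\qquad
\underbrace{10^{3} \times 10^{3} \times 10^{3} \times 10^{3}}_{40\ \text{years}} = 10^{12}\ \text{(a trillion)}
$$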

Progress on this scale is almost impossible for the human mind to grasp fully; we naturally assume that change will continue at its current pace. We have evolved to expect linear advances or gentle exponential curves, but the massive advances proposed by Singularity supporters truly boggle the mind. Kurzweil is quick to point this out in his books and public speaking engagements.
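The gap between those two intuitions is easy to see with a small Python sketch. Its assumptions are labeled in the comments: the “intuitive” forecast keeps adding this year’s absolute improvement every year, while the compounding forecast keeps multiplying by the same factor, using the thousandfold-per-decade figure quoted above. The function names are illustrative, not from any library.

```python
def linear_forecast(current: float, yearly_gain: float, years: int) -> float:
    """Intuitive guess: add the same absolute gain every year."""
    return current + yearly_gain * years


def exponential_forecast(current: float, yearly_factor: float, years: int) -> float:
    """Compounding: multiply by the same factor every year."""
    return current * yearly_factor ** years


decade_factor = 1000.0                     # assumption: thousandfold per decade
yearly_factor = decade_factor ** (1 / 10)  # about 2x per year
today = 1.0                                # today's capability, normalized to 1
this_years_gain = today * (yearly_factor - 1)  # what "the current rate" looks like now

for years in (10, 20, 30):
    linear = linear_forecast(today, this_years_gain, years)
    compounded = exponential_forecast(today, yearly_factor, years)
    print(f"{years} years: linear guess {linear:,.0f}x, compounding {compounded:,.0f}x")
```

After 30 years the linear guess is only about 31 times today’s capability, while compounding reaches roughly a billion times.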

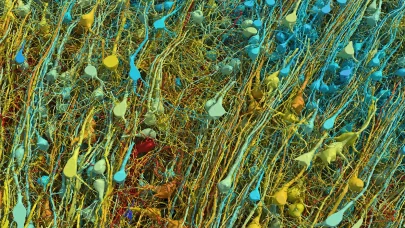

To critics who argue that Kurzweil underestimates the difficulty of the challenges involved in advanced machine intelligence, such as the complexity of reverse-engineering the human brain, Kurzweil responds in kind, charging them with underestimating the power of exponential growth.

To the naysayers, Grossman responds:

> You may reject every specific article of the Singularitarian charter, but you should admire Kurzweil for taking the future seriously. Singularitarianism is grounded in the idea that change is real and that humanity is in charge of its own fate and that history might not be as simple as one damn thing after another. Kurzweil likes to point out that your average cell phone is about a millionth the size of, a millionth the price of and a thousand times more powerful than the computer he had at MIT 40 years ago. Flip that forward 40 years and what does the world look like? If you really want to figure that out, you have to think very, very far outside the box. Or maybe you have to think further inside it than anyone ever has before.