The month of April 2018 saw four very important and interesting meetings to discuss the state of quantum computing technologies, their potential impacts, and the technology challenges ahead. These discussions took place against the backdrop of China's recent announcement of a $10 billion investment to build a National Laboratory for Quantum Information Sciences, scheduled to open in 2020. They also took place in light of the Department of Energy’s (DOE) Fiscal Year 2019 (FY-19) budget request, which includes a $105 million investment in quantum information science research, including quantum computing and quantum sensor technology. The conversations involved a number of organizations and covered research being done by both industry and government. The main message was that quantum computing has now passed the point of being just a “physicist’s dream” and is starting to address the hard challenges that make it an “engineer’s nightmare.”

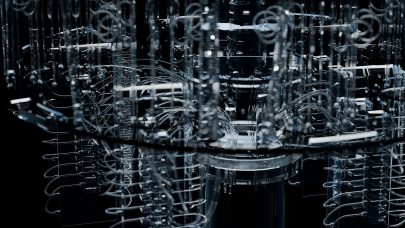

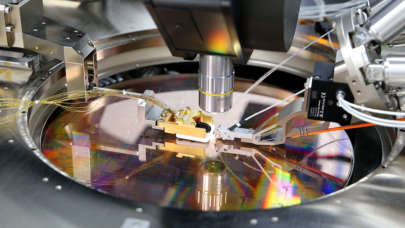

As a reminder, what makes quantum computing a physicist’s dream is its ability to tap into the quantum mechanical behaviors of superposition, tunneling, and entanglement, sometimes called "spooky" behavior. One of the first advocates of quantum computing was the physicist Richard Feynman, who speculated that the best way to simulate quantum mechanical behavior was to use a quantum computer. However, doing so poses huge engineering challenges, and a number of different approaches have been proposed, including quantum annealing, the gate model, and trapped ions. These challenges include the need to cool some types of quantum computing circuits to near absolute zero (colder than outer space) and the difficulty of achieving and maintaining quantum coherence. Open questions also remain about quantum computing programming models and data handling. As the April meetings revealed, the promises of the quantum computing dream are great, but the engineering nightmares are many.

The first April meeting was held by D-Wave on the 5th. This event was D-Wave's annual Washington, DC briefing for government and industry leaders on the progress the company made over the past year. The meeting opened with the big news that, in early 2018, D-Wave had begun testing its 4,000-qubit system, which will use a different interconnection topology. Testing of the new annealing-style quantum computer is being done on a ~500-qubit system, and the company is now scaling that smaller configuration up to the full 4,000-qubit size, which should be available in approximately 18 months.

The meeting also covered research being done at Los Alamos National Laboratory (LANL) on the D-Wave 1,000-qubit quantum computer located there. LANL researchers reported that, through the lab’s Rapid Response program, the computer was being used to develop applications ranging from machine learning to NP-hard optimization problems to uncertainty quantification (project summaries are available online). Despite the optimistic tone, however, D-Wave's International Business President, Bo Ewald, reminded the group that we are still in the very early days of quantum computing. He compared the state of the technology to where electronic computing was in the 1940s and 1950s. That sentiment was echoed at all the other April quantum computing events.

The next important quantum computing event was held at the Council on Competitiveness’ Advanced Computing Roundtable (ACR) on April 11th. This group of industry, academia, government, and national lab leaders meets semi-annually to discuss the status of advanced computing technologies and their impact on national competitiveness. The meeting included a number of presentations on quantum computing. The first was given by Jerry Chow of the IBM T.J. Watson Research Center. Chow provided great background on the topic and discussed both its exciting prospects and its challenges. He made the very interesting point that maximizing the potential of quantum computing will require finding the right balance of controllability, connectivity, and coherence. He went on to suggest ways to measure quantum computing capability and described IBM's ongoing work in this area. Finally, Chow suggested that a National Quantum Initiative should play a role in spearheading research in this area.

The next presentation was made by René Copeland, the President of D-Wave Government Inc. Copeland recapped the discussions from the previous week’s briefing. He also provided more context on how the D-Wave annealing approach compares with the other quantum computing approaches being pursued by Google, IBM, Intel, Rigetti, Microsoft, and IonQ. Copeland made the point that, despite the potential limitations of the annealing approach, the D-Wave computer is available today and is already being used by researchers to readily solve problems that were previously extremely difficult.

The next presentation at the ACR meeting was made by Bob Sorenson of Hyperion Research. Sorenson described Hyperion Research's new effort in the area of quantum computing, which takes a new approach: forming a panel of experts on activities in the U.S. and around the world. The study will cover both the state of quantum computing technologies and their use for modeling and simulation, artificial intelligence, machine learning, communications, and other applications. During his presentation, Sorenson reported the results of the firm's first survey, and the overall impression he left was that there is a great deal of activity in the quantum computing space. However, he, like the other presenters, stressed that the technology is still very immature. Sorenson made the great point that while we may have a quantum equivalent of the transistor, we still need to develop the quantum equivalents of networks, memory, and storage.

The final presentation at the Council’s ACR meeting was given by R. Paul Stimers of K&L Gates, a Washington, DC law firm. Stimers introduced the idea of building an industry coalition around quantum computing. The coalition would create a unified business voice to promote U.S. leadership in all aspects of the technology, with the main goal of proactively addressing the challenges posed by other countries. The coalition is expected to endorse the idea of a National Quantum Initiative to help ensure U.S. leadership by supporting the necessary R&D and by using the U.S. national laboratories to accelerate deployment of the technology for government and industry applications.

The next quantum computing discussions occurred on April 16th and 17th at the Hyperion Research HPC User Forum, held in Tucson, Ariz. While the previous discussions took a U.S.-centric perspective, the Hyperion Research presentations (available online) provided a much more global view. The presentations started with Bob Sorenson, who again described Hyperion Research's quantum computing market research activities and the results of the first survey it has conducted in this area. The survey results make clear that a lot of work is happening in many organizations (both government and industry) and that the challenges are plentiful. Sorenson ended his presentation on the optimistic note that “The systems we see now are not camera-ready, but they are critically important quantum algorithm research tools.”

The HPC User Forum then featured a number of presentations on the topic, including talks by Xiaobo Zhu of the University of Science and Technology of China, Steve Reinhardt of D-Wave, John Martinis of Google, Jim Held of Intel, Matthias Troyer of Microsoft, T.R. Govindan of NASA, and Blake Johnson of Rigetti. Each presenter offered a perspective on the exciting possibilities of quantum computing along with a realistic assessment of the challenges that must be overcome to realize that potential. The Hyperion Research survey captured a number of those challenges, including:

- Achieving and maintaining quantum coherence;

- Qubit connectivity;

- Error identification and correction;

- Lack of quantum algorithms and programming models;

- And many others.

Despite these challenges, the presenters were very excited about the potential benefits and about the opportunity to find innovative ways to overcome the obstacles.

The fourth quantum computing discussion in April occurred at the Department of Energy’s (DOE) Office of Science Advanced Scientific Computing Advisory Committee (ASCAC) meeting on the 18th. This quarterly meeting gives committee members and the public an opportunity to understand what is happening in the Advanced Scientific Computing Research (ASCR) program. DOE Under Secretary for Science Paul Dabbar kicked off the meeting, expressing his, Secretary Perry’s, and the White House’s excitement about and support for the Department’s HPC activities. Most of the Under Secretary’s remarks centered on exascale computing. However, he also mentioned the FY-19 budget request of $105 million for quantum information science research and noted that it was spread across all of the Office of Science programs. He explained that this was because the DOE appreciates that quantum computing is in its early days and that basic research is required everywhere, from high energy physics to basic energy sciences to computer science.

During the ASCAC meeting, Joe Lykken of Fermilab made a presentation on Perspectives on Quantum Information Science (available online). The presentation offered an interesting take on the opportunities and challenges of quantum computing from the perspective of a particle accelerator laboratory. Lykken explained the important role that superconducting microwave cavities could play in extending quantum coherence times. He noted that Fermilab has been developing such cavities for its accelerators for decades and that this experience could well apply to future quantum computing systems. He also explained why quantum computing matters to Fermilab: it could help decipher the mysteries of materials at their most fundamental levels.

The month of April 2018 saw an amazing number of very important quantum computing meetings. The good thing about these meetings is that they provided some great examples of how quantum computing can be applied to solve previously unsolvable problems. Despite that optimism, however, there was also a very realistic understanding of the challenges that must be addressed. The discussions also made clear that industry and governments are willing to make significant commitments to developing usable quantum computing technologies. The number of research activities and the diversity of approaches are very impressive. There is no question: the quantum computing race is on!