Quantum computing is in the midst of the so-called NISQ era – a time of noisy intermediate-scale quantum devices based on a variety of qubit modalities, all of which are too error-prone for practical computing. While the qubit zoo keeps growing – superconducting, trapped ions, neutral atoms, photonics, spin qubits, and more – its members still share (at least so far) untenable error rates. You often hear that 1,000 physical qubits could be required to build a single logical qubit, and that many thousands of logical qubits will be needed to gain true quantum advantage.

The result, not surprisingly, is that error correction is considered the greatest near-term challenge facing quantum computing. Conquer it or fail. Now, a young French company, Alice and Bob, is betting big on a relatively new member of the qubit zoo, the Cat Qubit (yes, named for Schrödinger’s cat). It could be a game-changer, believes Alice and Bob, which recently demonstrated the ability to dramatically suppress bit-flips using its cat qubit technology.

The broad problem is qubit susceptibility to noise – even tiny environmental disturbances (thermal, EMI, etc.) cause qubits to lose coherence, the fragile quantum state that gives quantum computing its power. Noise is one reason most current quantum computing devices operate only in dilution refrigerators, in an attempt to isolate them from all interference. (Frigid temperature is also required to implement the superconducting circuits needed to create superposition and entanglement for some types of qubits.)

Alice and Bob’s cat qubits take a different approach. Rather than isolating qubits from noise, the company uses a two-photon injection scheme that maintains the system energy level and protects against decoherence. The result, according to work described in a recent arXiv paper (Quantum control of a cat-qubit with bit-flip times exceeding ten seconds) posted two weeks ago, is dramatic protection against bit flips and a reduction in the chip area required for implementation.

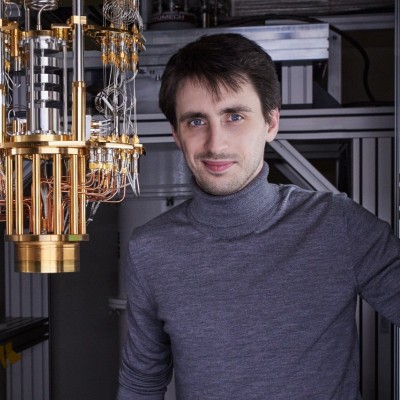

In a briefing with HPCwire, Théau Peronnin, Alice and Bob cofounder and CEO, said, “It’s a shift of paradigm [that’s] very profound compared to our competitors. Most of our competitors are trying to isolate as much as possible their qubits from their environments, to decouple them. We’re doing exactly the opposite. We’re going for a device that must be coupled as closely as possible to the environment but through this very specific channel.”

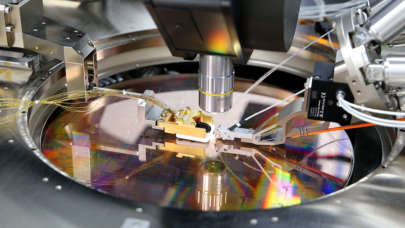

“We’re building on one of the most used platforms, which is superconducting circuits. We do nothing fancy in terms of materials or in terms of frequency. We operate in a fridge with a rack of control electronics nearby, and that means just like every other superconducting quantum computer. The main difference of what we do is the layout of the chip, the way we designed the chip, the way we encode quantum information, and the way we operate the device,” he said.

Founded three years ago, Alice and Bob, says Peronnin, has always focused on developing fault-tolerant technology versus chasing qubit count. At the APS March meeting last year, the company showed progress on a four-cat-qubit device, which served as the platform for its recent work demonstrating error suppression. The Alice and Bob roadmap calls for taping out a 14-cat-qubit device in 2024, which, says Peronnin, is the number required to implement a logical qubit.

“The midterm target for us is to have a 40-cat-qubit platform by 2026. And the goal of that platform will be to prove all the hypotheses of the architecture, showing how we do routing, how we do logical gates, basically just prove everything and let us develop the firmware and connect to the software stack,” he said.

It’s worth noting that others are also exploring cat qubits. AWS quantum, for example, has been looking at cat qubits for its quantum computer development effort. This AWS blog from 2021 (Designing a fault-tolerant quantum computer based on Schrödinger-cat qubits) is a pretty good primer on the technology. The broad concept, as mentioned before, is to use bias noise to maintain the cat qubit state.

Alice and Bob has demonstrated with its latest experiment a way to implement that with its two-photon injection system. Researchers were also able to remove the ancillary transmon read-out elements, further conserving chip real estate. It’s best to read the Alice and Bob work directly.

Here’s the latest paper’s abstract:

“Binary classical information is routinely encoded in the two metastable states of a dynamical system. Since these states may exhibit macroscopic lifetimes, the encoded information inherits a strong protection against bit-flips. A recent qubit – the cat-qubit – is encoded in the manifold of metastable states of a quantum dynamical system, thereby acquiring bit-flip protection. An outstanding challenge is to gain quantum control over such a system without breaking its protection. If this challenge is met, significant shortcuts in hardware overhead are forecast for quantum computing.

“In this experiment, we implement a cat-qubit with bit-flip times exceeding ten seconds. This is a four order of magnitude improvement over previous cat-qubit implementations, and six orders of magnitude enhancement over the single photon lifetime that compose this dynamical qubit. This was achieved by introducing a quantum tomography protocol that does not break bit-flip protection. We prepare and image quantum superposition states, and measure phase-flip times above 490 nanoseconds. Most importantly, we control the phase of these superpositions while maintaining the bit-flip time above ten seconds. This work demonstrates quantum operations that preserve macroscopic bit-flip times, a necessary step to scale these dynamical qubits into fully protected hardware-efficient architectures.”
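The orders of magnitude quoted in the abstract can be turned into rough time scales. The short sketch below is only back-of-the-envelope arithmetic on the figures stated above; the function and variable names are illustrative, and the derived values are approximate because the abstract gives lower bounds ("exceeding", "above"), not exact measurements.

```python
# Figures taken from the abstract (both are lower bounds).
BIT_FLIP_TIME_S = 10.0        # "bit-flip times exceeding ten seconds"
PHASE_FLIP_TIME_S = 490e-9    # "phase-flip times above 490 nanoseconds"


def scale_down(value: float, orders_of_magnitude: int) -> float:
    """Return value reduced by the given number of orders of magnitude."""
    return value / 10 ** orders_of_magnitude


# "Four order of magnitude improvement over previous cat-qubit implementations"
previous_cat_bit_flip_s = scale_down(BIT_FLIP_TIME_S, 4)   # about 1 millisecond

# "Six orders of magnitude enhancement over the single photon lifetime"
single_photon_lifetime_s = scale_down(BIT_FLIP_TIME_S, 6)  # about 10 microseconds

# How lopsided the two error types are in this experiment.
bit_to_phase_ratio = BIT_FLIP_TIME_S / PHASE_FLIP_TIME_S

print(f"Implied previous cat-qubit bit-flip time: ~{previous_cat_bit_flip_s * 1e3:.0f} ms")
print(f"Implied single-photon lifetime: ~{single_photon_lifetime_s * 1e6:.0f} us")
print(f"Bit-flip time / phase-flip time: ~{bit_to_phase_ratio:.1e}")
```

Read this way, bit flips are rarer than phase flips by a factor on the order of ten million, which is why the remaining error-correction effort can concentrate on phase flips.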

Peronnin explained further, “The cat qubit thing really happens at the hardware level. It’s really a different layout and a different sort of physics at play. A typical superconducting qubit is just a two-level system. You have two possible states. In our case, what we’re using are called harmonic oscillators – antennas, if you want – that can have any number of microwave photons and we’re in the gigahertz frequencies. So the mathematical space behind it is much larger. We have much more room in terms of possible states, [there are] many more maths available.

“This, actually, is very fundamental because the whole point of error correction in general is to start from a large mathematical space and burn most of it to stabilize a sub-system of size two. What it takes to be able to do that kind of engineering is to start with a device that has such a large Hilbert space. You could actually do it with other types of technology. You could do it in the vibration mode of trapped ions, for example. You could do it with optical photons. The constraint or reason why it’s only done in superconducting circuits is it’s not just about encoding. It’s about how you do this stabilization. And stabilization is all about having found a way to inject energy to refuel continuously in energy [and] to extract entropy because when you’re fighting noise, you’re fighting entropy, without extracting information and without introducing decoherence,” said Peronnin.

Alice and Bob’s two-photon injection scheme is able to accomplish this refresh of energy without compromising coherence. The point of all this work, of course, is effective error suppression. Cat qubits suppress bit flips effectively but don’t do anything to control phase flips.

Peronnin noted, “Most, if not all, of our competitors are aiming to correct errors through redundancy, just pure error correction code. But this requires a tremendous amount of redundancy. Just to put the numbers here. If you look at the previous state of the art of architecture to break RSA-2048, running Shor (Shor’s algorithm), it was a Google paper (2019) that said that it would take 20 million or 22 million physical qubits to do that, out of which only 0.05% would actually compute. 99.95% [of qubits] are there to correct for errors. So this is the level of overhead we’re dealing with.”
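To make that overhead concrete, the percentages can be applied to the qubit counts Peronnin cites. This is a minimal sketch using only the numbers in the quote; the helper name `split_qubits` is hypothetical, and the 0.05% figure is taken at face value.

```python
def split_qubits(total_physical: int, compute_fraction: float = 0.0005) -> tuple[int, int]:
    """Split a physical-qubit budget into (computing, error-correcting) qubits.

    compute_fraction defaults to 0.05%, the share quoted in the interview.
    """
    computing = round(total_physical * compute_fraction)
    return computing, total_physical - computing


for total in (20_000_000, 22_000_000):
    computing, correcting = split_qubits(total)
    print(f"{total:,} physical qubits: {computing:,} compute, {correcting:,} correct errors")
```

On these figures, only about 10,000 to 11,000 of the roughly 20 million qubits would be doing the computation itself.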

“The cat qubit is designed to exponentially suppress one type of error (bit flip). And the exponential is very important. Because contrary to error mitigation, or error correction, which is just gaining a linear factor, you have to come up with an exponential solution to this exponential problem of errors. This is what the cat qubit does. We actually gain a square root factor on the overhead, meaning the number of physical qubits required to build a logical qubit, because we switch from a 2D error correction code to just a 1D error correction code. This is what we proved a few months ago, but it just ran in the peer review magazine, Physical Review Letters. Thanks to this first level of error correction our [scheme] would require 60 times fewer qubits than Google’s. So instead of 22 million we’d need around 360,000 cat qubits,” he said.
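Two pieces of arithmetic sit behind this quote. The sketch below checks the "60 times fewer" figure and illustrates the square-root saving with a deliberately simplified model: it assumes a 2D code needs about d × d data qubits for code distance d, while a 1D code needs about d, and it ignores ancilla qubits, routing, and constant factors. The function names and the example distance are illustrative, not taken from the company's papers.

```python
import math

GOOGLE_ESTIMATE = 22_000_000   # physical qubits quoted for RSA-2048
REDUCTION_FACTOR = 60          # "60 times fewer qubits"


def reduced_estimate(baseline: int, factor: int) -> float:
    """Qubit count after dividing the baseline by the claimed reduction factor."""
    return baseline / factor


def data_qubits_2d(distance: int) -> int:
    """Simplified data-qubit count for a 2D code laid out on a d x d grid."""
    return distance ** 2


def data_qubits_1d(distance: int) -> int:
    """Simplified data-qubit count for a 1D code laid out on a line of length d."""
    return distance


cat_qubits = reduced_estimate(GOOGLE_ESTIMATE, REDUCTION_FACTOR)
print(f"22 million / 60 is about {cat_qubits:,.0f} cat qubits")

d = 15  # example code distance, chosen only for illustration
print(f"distance {d}: 2D needs ~{data_qubits_2d(d)}, 1D needs ~{data_qubits_1d(d)} data qubits")
print(f"sqrt of the 2D count: {math.isqrt(data_qubits_2d(d))}")
```

The division gives roughly 367,000, in line with the "around 360,000" in the quote. In the simplified model, the 1D count is exactly the square root of the 2D count, which is the sense of the "square root factor" Peronnin describes.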

Those are impressive improvement numbers. But it must also be said that technology (software and hardware) moves fast in the quantum community. New and improved approaches keep appearing. Researchers from China, for example, estimated late last year that a circuit with 372 physical qubits could be enough to challenge RSA-2048, using “a universal quantum algorithm for integer factorization by combining the classical lattice reduction with a quantum approximate optimization algorithm (QAOA).”

The end-game for the physical-to-logical-qubit ratio is a moving, and shrinking, target, as Alice and Bob’s cat qubit progress demonstrates.

Alice and Bob must now turn its theory and early demonstration into bigger systems that potential users can experiment with. Alice and Bob started with two people three years ago, has grown to ~80 staff today, and expects to double headcount in 18 months.

Peronnin said, “We know that we’re on a path where we have to prove the hypotheses and the tech building blocks of the architecture. What we did with the latest two papers is part of that journey; it proves we can push this bit flip suppression, autonomous correction, and that we can do fast, single-qubit gates. We haven’t published yet the results on our two-qubit gates, but we will soon. Then we will publish on the four-qubit device, on error detection at that point, and then on the 14-qubit [device] also on error correction. Then the challenge is implementing the default logical gate.”

Getting from here to there will take time. As young quantum computing companies go, Alice and Bob’s offering is both nascent and modest when compared with IBM, Rigetti, D-Wave, IonQ, QuEra, etc. But solving the problem cat qubits tackle – error suppression – would be a game-changer, and once Alice and Bob’s full system is running, scaling it up may happen more quickly. There are still many challenges shared with everyone else – developing effective quantum memory and networking, for example.

Peronnin said the tools that users will need are the same generally-available tools for gate-based quantum computing. Alice and Bob will handle the underlying characteristics of the cat qubits, and this is broadly the approach being taken by all vendors developing quantum computers.

Recently, former Atos CEO Elie Girard joined Alice and Bob as executive chairman, and Alice and Bob has joined the Atos/Eviden Captiva partners. “Here, we need to do some advanced education about the peculiarities of cat qubits. Like every deep-tech startup, we need buy-in on the roadmap and to make our milestones as understandable as possible,” said Peronnin. “The goal will be to distribute our computing time, so really [that’s] access to a machine, first a single qubit and then more as we move along our roadmap.”

Early user access to Alice and Bob’s QPU will be through Atos/Eviden. Later this year, the company will add other cloud providers, said Peronnin. This early access will be to just one cat qubit. “There has been so much hype, so much noise in this space, and people claiming all sorts of things. We want to just give direct access to try it out,” he said.

Today there are many qubit technologies vying for sway. Superconducting-based qubits are the most developed and widely used. Neutral atoms have recently become popular. The trapped ion crowd touts high qubit fidelity and long coherence times. Color vacancies in diamonds are also qubit candidates and are being explored as quantum memory.

Moreover, cat qubits aren’t the only ones to hold promise for effective error suppression. Topological qubits, based on the Majorana particle, are also thought to be game-changers – if they can actually be implemented. Microsoft and the Quantum Science Center at ORNL are independently researching topological qubits. Quantinuum, a trapped ion qubit specialist, also reported progress on creating a topological state.

The game is afoot.

Peronnin said, “I have trouble imagining long-term, several qubit types remaining prominent. In 15 years, I doubt there will be several because at some point, one is going to emerge as better and the financing is going to focus on that. When will we see this start to happen? I don’t think soon because currently every platform has pros and cons. Obviously, I think superconductors are much better than everything else. [That said], the neutral atoms are best for the noisy intermediate scale era, and that’s what we’re in at the moment. But they will have a very hard time doing quantum error correction later on. They have a prime time now. It’s going to be fun to watch.”

Stay tuned.

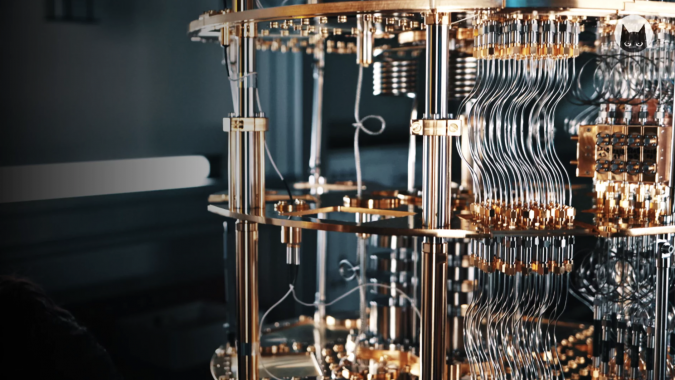

Top image: Detail of a Bluefors XLD Cryostat In Alice and Bob’s Paris Lab. Credit: Alice and Bob