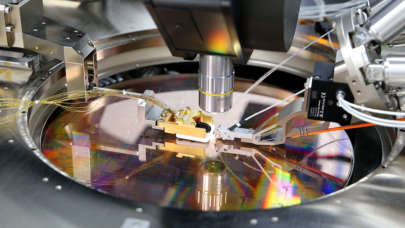

A team of researchers at the University of California, San Diego (UCSD) has built a solid state storage system that they claim outperforms state-of-the-art flash memory products. The new system, known as Moneta, uses phase change memory, a technology that some predict will replace the NAND flash memory used in nearly every solid state drive (SSD) today. The UCSD prototype will be on display this week at the Design Automation Conference in San Diego.

Moneta, which is the Latin name for the goddess of memory, uses phase change memory (PCM) modules from Micron Technology as the storage medium and employs Xilinx FPGAs as memory controllers. The system DIMM, referred to as Onyx, is a 640 MB device made up of 40 of Micron’s 16 MB PCM (P8P) chips.* The entire system houses 16 of these DIMMs, yielding 10 GB of raw storage: 8 GB usable and 2 GB for error correction and metadata. The card connects to a Linux host via a PCIe x8 link. The prototype and its performance characteristics are described in detail here.
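
The capacity figures above follow from simple multiplication. The short sketch below works through them; the variable names are illustrative only, and it assumes binary units (1 GB = 1,024 MB), which is what makes the reported totals come out to round numbers.

```python
# Capacity figures as reported in the article.
# Assumption: binary units, i.e. 1 GB = 1024 MB.
CHIP_MB = 16          # one Micron P8P PCM chip
CHIPS_PER_DIMM = 40   # chips on one Onyx DIMM
DIMMS = 16            # DIMMs in the Moneta system
USABLE_GB = 8         # capacity exposed to the host

dimm_mb = CHIP_MB * CHIPS_PER_DIMM          # capacity of one DIMM, in MB
raw_gb = dimm_mb * DIMMS / 1024             # raw capacity of the system, in GB
overhead_gb = raw_gb - USABLE_GB            # reserved for ECC and metadata
overhead_fraction = overhead_gb / raw_gb    # share of raw capacity reserved

print(f"{dimm_mb} MB per DIMM, {raw_gb:.0f} GB raw, "
      f"{overhead_fraction:.0%} reserved for error correction and metadata")
```

In other words, one fifth of the raw PCM is set aside rather than exposed to the host.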

Like NAND flash memory, phase change memory is non-volatile, but it’s a totally different digital animal. PCM is based on a metal alloy called chalcogenide and works, as its name suggests, by changing the state of the alloy. To write data, electrical current is applied to generate heat, which switches the alloy between its crystalline and its amorphous state. To read the data back, a smaller current is applied to determine which state the alloy is in.

PCM has several significant advantages over NAND. To begin with, it is inherently more robust than NAND, having an average endurance of 100,000,000 write cycles as compared to 100,000 for enterprise-class SLC NAND. That means the wear leveling algorithm can be much simpler, resulting in less software and memory overhead. PCM is also byte addressable, which means it is much more efficient than NAND at accessing smaller chunks of data, again reducing system complexity. Finally, PCM is just faster. The bits can be switched quickly, and can be flipped in place, avoiding the laborious block erase-and-write cycle required by NAND.
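
To get a rough feel for what a thousandfold endurance gap means, the sketch below estimates how long a device would last under continuous writes. It is a back-of-the-envelope illustration, not a measurement: it assumes perfect wear leveling, ignores write amplification, uses decimal units, and applies Moneta's reported capacity and large-block write rate to a hypothetical NAND device of the same size. The function name `years_to_wear_out` is invented for this example.

```python
def years_to_wear_out(capacity_bytes, endurance_cycles, write_bytes_per_sec):
    """Idealized lifetime: every cell is written equally often (perfect wear
    leveling) and the device is written continuously at the given rate."""
    total_writable_bytes = capacity_bytes * endurance_cycles
    seconds = total_writable_bytes / write_bytes_per_sec
    return seconds / (365 * 24 * 3600)

# Assumptions for illustration: decimal units, Moneta's reported figures.
CAPACITY = 8 * 10**9        # 8 GB usable
WRITE_RATE = 371 * 10**6    # 371 MB/sec sustained writes

PCM_CYCLES = 100_000_000    # endurance cited for PCM
NAND_CYCLES = 100_000       # endurance cited for enterprise-class SLC NAND

pcm_years = years_to_wear_out(CAPACITY, PCM_CYCLES, WRITE_RATE)
nand_years = years_to_wear_out(CAPACITY, NAND_CYCLES, WRITE_RATE)

print(f"PCM:   about {pcm_years:.0f} years")
print(f"NAND:  about {nand_years * 365:.0f} days")
print(f"ratio: {pcm_years / nand_years:.0f}x")
```

Under these idealized assumptions the PCM device outlasts any realistic service life, while the same-sized NAND device would wear out in weeks, which is why NAND products depend on careful wear leveling and much larger capacities.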

A longer term advantage cited for PCM is that it will scale better than NAND (or even DRAM) as process geometries shrink below 20nm. This has yet to be proven, but it has certainly encouraged companies like Micron and Intel to invest heavily in the technology.

The PCM hardware is only half of the Moneta story though. The system’s real value is contained in the software, which has been tuned for the higher performing PCM. Specifically, the UCSD researchers developed a low-level block driver for Moneta that bypasses the Linux I/O scheduler to improve throughput and reduce latency.

“We’ve found that you can build a much faster storage device, but in order to really make use of it, you have to change the software that manages it as well. Storage systems have evolved over the last 40 years to cater to disks, and disks are very, very slow,” said Steven Swanson, professor of Computer Science and Engineering at UCSD and director of its Non-Volatile Systems Lab (NVSL). “Designing storage systems that can fully leverage technologies like PCM requires rethinking almost every aspect of how a computer system’s software manages and accesses storage. Moneta gives us a window into the future of what computer storage systems are going to look like, and gives us the opportunity now to rethink how we design computer systems in response.”

To prove the technology’s worth, the UCSD team compared Moneta to a well known NAND flash product, in this case, an 80GB Fusion-io ioDrive. However, because Moneta is a first-generation prototype, the results are something of a mixed bag.

For 512-byte block accesses, Moneta can read data at 327 MB/sec and write at 91 MB/sec, which is between two and seven times faster than the Fusion-io ioDrive. For large data blocks (32K and up), Moneta can read data at 1.1 GB/sec and write data at 371 MB/sec. Here the PCM system is still outrunning the ioDrive for reads, but is actually somewhat slower in writes. The researchers attribute this to the ioDrive’s better aggregate bandwidth associated with its larger memory capacity, all of which is optimized for large block sizes.
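
Throughput figures can be easier to compare when expressed as requests per second. The sketch below converts the Moneta numbers quoted above, assuming decimal megabytes (1 MB = 1,000,000 bytes). For the large-block case it assumes every request is exactly 32K, the smallest size in that range, so those results are upper bounds rather than measured rates. The helper name `ops_per_second` is made up for this example.

```python
def ops_per_second(mb_per_sec, request_bytes):
    """Convert throughput into requests per second (decimal MB assumed)."""
    return mb_per_sec * 1_000_000 / request_bytes

SMALL = 512          # bytes per small request
LARGE = 32 * 1024    # 32K; assumed request size for the large-block figures

small_read_ops = ops_per_second(327, SMALL)
small_write_ops = ops_per_second(91, SMALL)
large_read_ops = ops_per_second(1100, LARGE)    # upper bound
large_write_ops = ops_per_second(371, LARGE)    # upper bound

for label, ops in [
    ("512-byte reads", small_read_ops),
    ("512-byte writes", small_write_ops),
    ("32K reads", large_read_ops),
    ("32K writes", large_write_ops),
]:
    print(f"{label:16s} {ops:>10,.0f} requests/sec")
```

Seen this way, the small-block read figure works out to well over half a million requests per second, which is where per-request software overhead starts to dominate.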

Keep in mind that Fusion-io also offers PCIe flash devices with much more capacity and associated bandwidth than the version the UCSD researchers benchmarked. For example, Fusion-io claims 1.5 GB/sec in both reads and writes for its 320 GB ioDrive Duo with 64K block sizes, and 6.0 GB/sec in reads and 4.4 GB/sec in writes for its giant 5TB ioDrive Octal. Obviously size matters.

Other NAND SSD vendors are pushing the capacity/bandwidth envelope as well. Micron’s 700GB P320h flash drive delivers 3 GB/sec for reading and 2 GB/sec for writing (128K blocks); the latest 900GB RamSan-70 card from Texas Memory Systems manages 2 GB/sec reads and 1.4 GB/sec writes; and Virident Systems’ 800GB tachIOn drive tops out at 1.44 GB/sec and 1.2 GB/sec for reads and writes, respectively.

Of course, I/O bandwidth is just a single criterion. Performance under various loads and conditions (like when a drive becomes full) is also important. Cost, latency, longevity, and power consumption are other factors that need to be considered.

In the case of power consumption, the UCSD researchers point out that because PCM behaves less idiosyncratically than NAND, the software driver is simpler and therefore uses less CPU time. The researchers say that their Moneta prototype spends between 20 and 50 percent less CPU time performing I/O operations for small requests. That frees up the CPU to do application work and reduces the overall power consumption of the storage system.

The bottom line is that if Moneta can be scaled up to larger memory capacities and aggregate bandwidth on a single PCIe device, its natural advantage in read performance, small (i.e., random) write performance, and endurance will give the NAND-based SSD makers something to think about. The UCSD researchers contend that a PCM-based storage system will be of particular value in applications like high-performance caching systems and key-value stores, which require high-performance reads and small writes. For example, applications that do a lot of random I/O, like large graph computations, are especially suited to Moneta-type architectures.

The UCSD team plans to upgrade Moneta in the next six to nine months, using denser and higher performing PCM devices. No PCM roadmap from Micron or anyone else has been made public, but the researchers say that the Moneta technology could be commercially available in just a few years.

*The original version of this story erroneously reported the DIMM and P8P storage specifications in GB rather than MB. –Editor