IBM today announced that its Q (quantum) Network community had grown to more than 100 members, with Delta Air Lines and Los Alamos National Laboratory among the most recent additions, and that an IBM quantum computer had achieved a quantum volume (QV) benchmark of 32, in keeping with plans to double QV yearly. IBM also showcased proof-of-concept (POC) work with Daimler that uses a quantum computer to tackle materials research in battery development.

Perhaps surprisingly, the news was released at the 2020 Consumer Electronics Show (CES), taking place in Las Vegas this week. “Very few ‘consumers’ will ever buy a quantum computer,” agreed IBM’s Jeff Welser in a pre-briefing with HPCwire.

That said, CES has broadened its technology compass in recent years, and Delta CEO Ed Bastian delivered the opening keynote, touching upon technology’s role in transforming travel and the travel experience. Quantum computing, for example, holds promise for a wide range of relevant optimization problems such as traffic control and logistics. “We’re excited to explore how quantum computing can be applied to address challenges across the day of travel,” said Rahul Samant, Delta’s CIO, in the official IBM announcement.

IBM’s CES quantum splash was mostly about demonstrating the diverse and growing interest in quantum computing (QC) by companies. “Many of our clients are consumer companies themselves who are utilizing these systems within the Q network,” said Welser, who wears a number of hats for IBM Research, including VP of exploratory science and lab director of the Almaden Lab. “Think about the many companies who are trying to use quantum technology to come up with new materials that will make big changes in future consumer electronics,” said Welser.

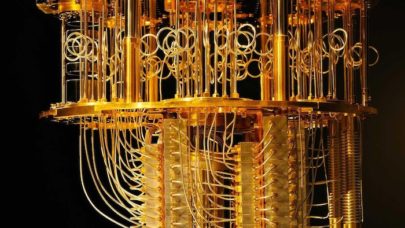

Since the network’s launch in 2016, IBM has aggressively sought to grow the IBM Q Network and its available resources. IBM now has a portfolio of 15 quantum computers, ranging in size from 53 qubits down to a single-qubit ‘system’, as well as extensive quantum simulator capabilities. Last year IBM introduced Quantum Volume, a new metric for benchmarking QC progress, and suggested others should adopt it. QV is a composite measure encompassing many attributes (gate fidelity, noise, coherence times, and more), not just qubit count; so far QV’s industry-wide traction has seemed limited.

Welser emphasized IBM Q Network membership has steadily grown and now spans multiple industries including airline, automotive, banking and finance, energy, insurance, materials and electronics. Newest large commercial members include Anthem, Delta, Goldman Sachs, Wells Fargo and Woodside Energy. New academia/government members include Georgia Institute of Technology and LANL. (A list and brief description of new members is at the end of the article.)

“IBM’s focus, since we put the very first quantum computer on the cloud in 2016, has been to move quantum computing beyond isolated lab experiments conducted by a handful of organizations, into the hands of tens of thousands of users,” said Dario Gil, director of IBM Research, in the official announcement. “We believe a clear advantage will be awarded to early adopters in the era of quantum computing and with partners like Delta, we’re already making significant progress on that mission.”

IBM’s achievement of a QV score of 32 and the recent Daimler work are also significant. When IBM introduced the QV concept broadly at the American Physical Society meeting last March, it had achieved a QV score of 16 on its fourth-generation 20-qubit system. At that time IBM likened QV to the Linpack benchmark used in HPC and called it suitable for comparing diverse quantum computing systems. Translating QV into a specific target score that indicates an ability to solve real-world problems is still mostly guesswork; indeed, different QV ratings may be adequate for different applications.
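To make the doubling target concrete, the short Python sketch below projects QV forward from the score of 32 reported here. It relies on background not stated in the article: under IBM's published definition, QV equals 2^n, where n is the width (and depth) of the largest square random circuit a machine runs successfully, so 16 corresponds to n = 4 and 32 to n = 5. The function names are hypothetical, and the assumption that doubling continues on schedule is illustrative only, not an IBM roadmap.

```python
def projected_qv(base_year: int, base_qv: int, year: int) -> int:
    """QV in `year`, assuming it doubles every year from `base_qv` in `base_year`."""
    if year < base_year:
        raise ValueError("year must not precede base_year")
    return base_qv * 2 ** (year - base_year)


def effective_width(qv: int) -> int:
    """Return n such that qv == 2**n (the square-circuit width/depth behind the score)."""
    if qv < 1 or qv & (qv - 1) != 0:
        raise ValueError("quantum volume is expected to be a power of two")
    return qv.bit_length() - 1


# Assumption: start from the QV of 32 reported in January 2020.
for year in range(2020, 2026):
    qv = projected_qv(2020, 32, year)
    print(f"{year}: QV {qv:>5} (square circuits of width/depth {effective_width(qv)})")
```

Seen this way, doubling QV each year amounts to adding one usable qubit and one usable circuit layer per year, which is why the raw score grows much faster than the size of the circuits behind it.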

IBM issued a blog today discussing the latest QV showing, which was achieved on a new 28-qubit system named Raleigh. IBM also elaborated somewhat on internal practices and timetable expectations.

Writing in the blog, IBM quantum researchers Jerry Chow and Jay Gambetta note, “Since we deployed our first system with five qubits in 2016, we have progressed to a family of 16-qubit systems, 20-qubit systems, and (most recently) the first 53-qubit system. Within these families of systems, roughly demarcated by the number of qubits (internally we code-name the individual systems by city names, and the development threads as different birds), we have chosen a few to drive generations of learning cycles (Canary, Albatross, Penguin, and Hummingbird).”

It gets a bit confusing, and it is best to consult the blog directly for a discussion of error mitigation efforts among the different IBM systems. Each system undergoes revisions to improve and experiment with topology and error mitigation strategies.

Chow and Gambetta write, “We can look at the specific case for our 20-qubit systems (internally referred to as Penguin), shown in this figure:

“Shown in the plots are the distributions of CNOT errors across all of the 20-qubit systems that have been deployed, to date. We can point to four distinct revisions of changes that we have integrated into these systems, from varying underlying physical device elements, to altering the connectivity and coupling configuration of the underlying qubits. Overall, the results are striking and visually beautiful, taking what was a wide distribution of errors down to a narrow set, all centered around ~1-2% for the Boeblingen system. Looking back at the original 5-qubit systems (called Canary), we are also able to see significant learning driven into the devices.”

Looking at the evolution of quantum computing by decade, IBM says:

- 1990s: fundamental theoretical concepts showed the potential of quantum computing

- 2000s: experiments with qubits and multi-qubit gates demonstrated quantum computing could be possible

- And the decade we just completed, the 2010s: evolution from gates to architectures and cloud access, revealing a path to a real demand for quantum computing systems

“So where does that put us with the 2020s? The next ten years will be the decade of quantum systems, and the emergence of a real hardware ecosystem that will provide the foundation for improving coherence, gates, stability, cryogenics components, integration, and packaging,” write Chow and Gambetta. “Only with a systems development mindset will we as a community see quantum advantage in the 2020s.”

On the application development front, the IBM-Daimler work is interesting. A blog post describing the work was published today by Jeannette Garcia (global lead for quantum applications in quantum chemistry, IBM). She is also an author of the accompanying paper (Quantum Chemistry Simulations of Dominant Products in Lithium-Sulfur Batteries). She framed the challenge nicely in the blog post:

“Today’s supercomputers can simulate fairly simple molecules, but when researchers try to develop novel, complex compounds for better batteries and life-saving drugs, traditional computers can no longer maintain the accuracy they have at smaller scales. The solution has typically been to model experimental observations from the lab and then test the theory.

“The largest chemical problems researchers have been so far able to simulate classically, meaning on a standard computer, by exact diagonalization (or FCI, full configuration interaction) comprise around 22 electrons and 22 orbitals, the size of an active space in the pentacene molecule. For reference, a single FCI iteration for pentacene takes ~1.17 hours on ~4096 processors and a full calculation would be expected to take around nine days.

“For any larger chemical problem, exact calculations become prohibitively slow and memory-consuming, so that approximation schemes need to be introduced in classical simulations, which are not guaranteed to be accurate and affordable for all chemical problems. It’s important to note that reasonably accurate approximations to classical FCI approaches also continue to evolve and is an active area of research, so we can expect that accurate approximations to classical FCI calculations will also continue to improve over time.”
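For a sense of scale, the figures in the passage above can be checked with a few lines of Python. This is a rough sketch under stated assumptions: the 22 electrons are split evenly into 11 spin-up and 11 spin-down, and symmetry reductions are ignored, so the determinant count is an upper-bound estimate rather than the dimension the cited calculation necessarily used. The function names are hypothetical.

```python
from math import comb


def fci_determinants(n_orbitals: int, n_alpha: int, n_beta: int) -> int:
    """Count Slater determinants for an FCI space, ignoring symmetry reductions."""
    return comb(n_orbitals, n_alpha) * comb(n_orbitals, n_beta)


def implied_iterations(total_days: float, hours_per_iteration: float) -> float:
    """Iterations implied by a total runtime and a per-iteration cost."""
    return total_days * 24 / hours_per_iteration


# Assumption: 22 electrons in 22 orbitals, split as 11 alpha + 11 beta.
dim = fci_determinants(22, 11, 11)
print(f"determinants: {dim:,}")

# One double-precision vector of that length, in terabytes (8 bytes per entry).
print(f"one state vector: {dim * 8 / 1e12:.2f} TB")

# The quoted ~1.17 hours per iteration and ~nine days in total.
print(f"implied iterations: {implied_iterations(9, 1.17):.0f}")
```

Under these assumptions the space holds roughly 5 × 10^11 determinants, a single vector of coefficients needs about 4 TB, and the quoted timings imply on the order of 185 iterations. That is consistent with the passage's point that exact calculations become memory-bound and slow beyond this size.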

IBM and Daimler researchers, building on earlier algorithm development work, were able to simulate the dipole moments of three lithium-containing molecules, “which brings us one step closer to the next-generation lithium sulfur (Li-S) batteries that would be more powerful, longer lasting and cheaper than today’s widely used lithium ion batteries.”

Garcia writes, “We have simulated the ground state energies and the dipole moments of the molecules that could form in lithium-sulfur batteries during operation: lithium hydride (LiH), hydrogen sulfide (H2S), lithium hydrogen sulfide (LiSH), and the desired product, lithium sulfide (Li2S). In addition, and for the first time ever on quantum hardware, we demonstrated that we can calculate the dipole moment for LiH using 4 qubits on IBM Q Valencia, a premium-access 5-qubit quantum computer.”

She notes that Daimler hopes quantum computers will eventually help it design next-generation lithium-sulfur batteries, because such machines have the potential to compute and precisely simulate the batteries’ fundamental behavior. Current QCs are too noisy and too limited in size, but the proof-of-concept work is promising. It also represents a specific, real-world opportunity.

Link to IBM blog: https://www.ibm.com/blogs/research/2020/01/quantum-volume-32/

Link to Daimler paper: https://arxiv.org/abs/2001.01120

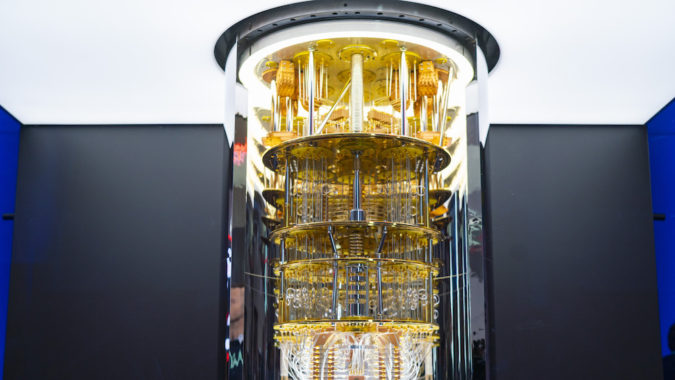

Feature image: Photo of the IBM Q System One quantum computer being shown at CES. Source: IBM

List of IBM Q New Members Excerpted from the Release (unedited)

Commercial organizations:

- Anthem: Anthem is a leading health benefits company and will be expanding its research and development efforts to explore how quantum computing may further enhance the consumer healthcare experience. Anthem brings its expertise in working with healthcare data to the Q Network. This technology also has the potential to help individuals lead healthier lives in a number of ways, such as helping in the development of more accurate and personalized treatment options and improving the prediction of health conditions.

- Delta Air Lines: The global airline has agreed to join the IBM Q Hub at North Carolina State University. They are the first airline to embark on a multi-year collaborative effort with IBM to explore the potential capabilities of quantum computing to transform experiences for customers and employees and address challenges across the day of travel.

Academic institutions and government research labs:

- Georgia Tech: The university has agreed to join the IBM Q Hub at the Oak Ridge National Laboratory to advance the fundamental research and use of quantum computing in building software infrastructure to make it easier to operate quantum machines, and developing specialized error mitigation techniques. Access to IBM Q commercial systems will also allow Georgia Tech researchers to better understand the error patterns in existing quantum computers, which can help with developing the architecture for future machines.

- Los Alamos National Laboratory: Joining as an IBM Q Hub will greatly help the Los Alamos National Laboratory research efforts in several directions, including developing and testing near-term quantum algorithms and formulating strategies for mitigating errors on quantum computers. The 53-qubit system will also allow Los Alamos to benchmark the abilities to perform quantum simulations on real quantum hardware and perhaps to push beyond the limits of classical computing. Finally, the IBM Q Network will be a tremendous educational tool, giving students a rare opportunity to develop innovative research projects in the Los Alamos Quantum Computing Summer School.

Startups:

- AIQTECH: Based in Toronto, AiQ is an artificial intelligence software enterprise set to unleash the power of AI to “learn” complex systems. In particular, it provides a platform to characterize and optimize quantum hardware, algorithms, and simulations in real time. This collaboration with the IBM Q Network provides a unique opportunity to expand AiQ’s software backends from quantum simulation to quantum control and contribute to the advancement of the field.

- BEIT: The Kraków, Poland-based startup is hardware-agnostic, specializing in solving hard problems with quantum-inspired hardware while preparing the solutions for the proper quantum hardware, when it becomes available. Their goal is to attain super-polynomial speedups over classical counterparts with quantum algorithms via exploitation of problem structure.

- Quantum Machines: QM is a provider of control and operating systems for quantum computers, with customers among the leading players in the field, including multinational corporations, academic institutions, start-ups and national research labs. As part of the IBM and QM collaboration, a compiler between IBM’s quantum computing programming languages, and those of QM is being developed and offered to QM’s customers. Such development will lead to the increased adoption of IBM’s open-sourced programming languages across the industry.

- TradeTeq: TradeTeq is the first electronic trading platform for the institutional trade finance market. With teams in London, Singapore, and Vietnam, TradeTeq is using AI for private credit risk assessment and portfolio optimization. TradeTeq is collaborating with leading universities around the globe to build the next generation of machine learning and optimization models, and is advancing the use of quantum machine learning to build models for better credit, investment and portfolio decisions.

- Zurich Instruments: Zurich Instruments is a test and measurement company based in Zurich, Switzerland, with the mission to progress science and help build the quantum computer. It is developing state-of-the-art control electronics for quantum computers, and now offers the first commercial Quantum Computing Control System linking high-level quantum algorithms with the physical qubit implementation. It brings together the instrumentation required for quantum computers from a few qubits to 100 qubits. They will work on the integration of IBM Q technology with the companies’ own electronics to ensure reliable control and measurement of a quantum device while providing a clean software interface to the next higher level in the stack.