Editor's Note: Users often ask, “What separates HPC from AI? They both do a lot of number crunching.” While that observation is true, one big difference is the precision required for a valid answer. HPC often requires the highest available precision (typically 64-bit double precision floating point), while many AI applications work with 8-bit integers or 8-bit floating point numbers. Using less precision often allows faster CPU/GPU arithmetic and a “good enough” result for many AI applications. The following article explains the trend toward lower precision computing in AI.

A grand competition of numerical representation is shaping up as some companies promote floating point data types in deep learning, while others champion integer data types.

Artificial Intelligence Is Growing In Popularity And Cost

Artificial intelligence (AI) is proliferating into every corner of our lives. The demand for products and services powered by AI algorithms has skyrocketed alongside the popularity of large language models (LLMs) like ChatGPT, and image generation models like Stable Diffusion. With this increase in popularity, however, comes an increase in scrutiny over the computational and environmental costs of AI, and particularly the subfield of deep learning.

The primary factors influencing the costs of deep learning are the size and structure of the deep learning model, the processor it is running on, and the numerical representation of the data. State-of-the-art models have been growing in size for years now, with compute requirements doubling every 6-10 months [1] for the last decade. Processor compute power has increased as well, but not nearly fast enough to keep up with the growing costs of the latest AI models. This has led researchers to delve deeper into numerical representation in attempts to reduce the cost of AI. Choosing the right numerical representation, or data type, has major implications for the power consumption, accuracy, and throughput of a given model. There is, however, no single answer to which data type is best for AI. Data type requirements vary between the two distinct phases of deep learning: the initial training phase and the subsequent inference phase.

Finding the Sweet Spot: Bit by Bit

When it comes to increasing AI efficiency, the method of first resort is quantization of the data type. Quantization reduces the number of bits required to represent the weights of a network. Reducing the number of bits not only makes the model smaller, but reduces the total computation time, and thus reduces the power required to do the computations. This is an essential technique for those pursuing efficient AI.
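As a rough illustration of the size reduction, the sketch below computes weight storage at several bit widths. The 7-billion-parameter count is a hypothetical figure chosen for the example, not one taken from this article, and the calculation ignores activations and any scale metadata a quantized model would also store.

```python
def model_size_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone at a given bit width."""
    return num_params * bits_per_weight // 8

# Hypothetical model size, used only for illustration.
NUM_PARAMS = 7_000_000_000

for name, bits in [("FP32", 32), ("FP16/BF16", 16), ("FP8/INT8", 8)]:
    gib = model_size_bytes(NUM_PARAMS, bits) / 2**30
    print(f"{name:>10}: {gib:6.1f} GiB")
```

Halving the bit width halves the weight storage, which is why moving from FP32 to an 8-bit type cuts the footprint to a quarter.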

AI models are typically trained using single precision 32-bit floating point (FP32) data types. It was found, however, that all 32 bits aren’t always needed to maintain accuracy. Attempts at training models using half precision 16-bit floating point (FP16) data types showed early success, and the race to find the minimum number of bits that maintains accuracy was on. Google came out with their 16-bit brain float (BF16), and models being primed for inference were often quantized to 8-bit floating point (FP8) and integer (INT8) data types. There are two primary approaches to quantizing a neural network: Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT). Both methods aim to reduce the numerical precision of the model to improve computational efficiency, memory footprint, and energy consumption, but they differ in how and when the quantization is applied, and the resulting accuracy.

Post-Training Quantization (PTQ) occurs after training a model with higher-precision representations (e.g., FP32 or FP16). It converts the model’s weights and activations to lower-precision formats (e.g., FP8 or INT8). Although simple to implement, PTQ can result in significant accuracy loss, particularly in low-precision formats, because the model was never trained to tolerate quantization errors. Quantization-Aware Training (QAT) incorporates quantization during training, allowing the model to adapt to reduced numerical precision. Forward and backward passes simulate quantized operations, computing gradients with respect to quantized weights and activations. Although QAT generally yields better model accuracy than PTQ, it requires modifications to the training process and can be more complex to implement.
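The core step of PTQ can be sketched in a few lines. This is a minimal example that assumes symmetric, per-tensor INT8 scaling, which is one common scheme among several; the function names `quantize_int8` and `dequantize` and the sample weights are made up for illustration and do not come from any particular framework.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the stored integers back to approximate real values."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.05]   # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print("quantized:", q)
print("max abs error:", max(errors))
```

Note that the smallest weight (0.003) rounds to zero because it is less than half of one quantization step. This is the kind of error a PTQ model has never seen during training, and which QAT exposes to the model by applying a similar round trip inside the training loop.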

The 8-bit Debate

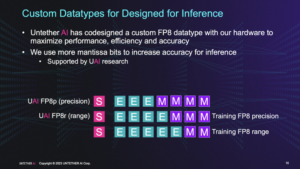

The AI industry has begun coalescing around two preferred candidates for quantized data types: INT8 and FP8. Every hardware vendor seems to have taken a side. In mid-2022, a paper by Graphcore and AMD [2] floated the idea of an IEEE standard FP8 data type. A joint paper with a similar proposal from Intel, Nvidia, and Arm [3] followed shortly after. Other AI hardware vendors like Qualcomm [4, 5] and Untether AI [6] also wrote papers promoting FP8 and reviewing its merits versus INT8. But the debate is far from settled. While there is no single answer for which data type is best for AI in general, there are superior and inferior data types for particular AI processors and model architectures with specific performance and accuracy requirements.

Integer Versus Floating Point

Floating point and integer data types are two ways to represent and store numerical values in computer memory. There are a few key differences between the two formats that translate to advantages and disadvantages for various neural networks in training and inference.

The differences all stem from their representation. Floating point data types are used to represent real numbers, which include both integers and fractions. These numbers are stored in a binary form of scientific notation, with a sign, a significand (mantissa), and an exponent that scales it.

On the other hand, integer data types are used to represent whole numbers (without fractions). The two representations differ greatly in precision and dynamic range. Floating point numbers have a wider dynamic range than their integer counterparts. Integer numbers have a smaller range and can only represent whole numbers with a fixed level of precision.
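To make the sign, exponent, and mantissa fields concrete, the sketch below unpacks a standard FP32 value using only the Python standard library and compares the largest FP32 value with the largest 32-bit signed integer. The helper name `fp32_fields` is an assumption made for this example.

```python
import struct

def fp32_fields(x: float):
    """Split an FP32 value into its sign, exponent, and mantissa bit fields."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # 23 fraction bits; the leading 1 is implicit
    return sign, exponent, mantissa

sign, exponent, mantissa = fp32_fields(-6.5)
# Rebuild the value from its fields: -6.5 = -1.625 * 2**2
value = (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)

INT32_MAX = 2**31 - 1                    # about 2.1e9
FP32_MAX = (2 - 2.0**-23) * 2.0**127     # about 3.4e38

print(sign, exponent, mantissa, value)
print(f"INT32 max = {INT32_MAX:.3e}, FP32 max = {FP32_MAX:.3e}")
```

Both types occupy 32 bits, but the exponent field lets FP32 reach values many orders of magnitude larger (and smaller) than the integer type, at the cost of uneven spacing between representable numbers.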

Integer vs Floating Point for Training

In deep learning, the numerical representation requirements differ between the training and inference phases due to the unique computational demands and priorities of each stage. During the training phase, the primary focus is on updating the model’s parameters through iterative optimization, which typically requires a higher dynamic range to ensure accurate propagation of gradients and convergence of the learning process. Consequently, floating-point representations, such as FP32, FP16, and more recently FP8, are generally employed during training to maintain sufficient dynamic range. The inference phase, by contrast, is concerned with the efficient evaluation of the trained model on new input data, where the priority shifts toward minimizing computational complexity, memory footprint, and energy consumption. In this context, lower-precision integer representations, such as 8-bit integer (INT8), become an option in addition to FP8. The ultimate decision depends on the specific model and underlying hardware.

Integer vs Floating Point for Inference

The best data type for inference will vary depending on the application and the target hardware. Real-time and mobile inference services tend to use the smaller 8-bit data types to reduce memory footprint, compute time, and energy consumption while maintaining enough accuracy.

FP8 is growing increasingly popular, as every major hardware vendor and cloud service provider has addressed its use in deep learning. There are three primary flavors of FP8, defined by the ratio of exponent bits to mantissa bits. Having more exponent bits increases the dynamic range of a data type, so FP8 E3M4, consisting of 1 sign bit, 3 exponent bits, and 4 mantissa bits, has the smallest dynamic range of the three. This representation sacrifices range for precision by reserving more bits for the mantissa, which increases the accuracy. FP8 E4M3 has an extra exponent bit, and thus a greater range. FP8 E5M2 has the highest dynamic range of the trio, making it the preferred target for training, which requires greater dynamic range. Having a collection of FP8 representations allows for a tradeoff between dynamic range and precision, as some inference applications would benefit from the increased accuracy offered by an extra mantissa bit.
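The range-versus-precision tradeoff among the three formats can be estimated with a short calculation. The sketch below assumes IEEE 754-style conventions (a bias of 2^(e-1) - 1, the top exponent code reserved for infinities and NaNs, and subnormals supported). This is an assumption for illustration: published FP8 proposals differ in such details, and some variants reclaim reserved encodings to extend the range, so the exact limits on real hardware may not match these numbers.

```python
def ieee_style_range(exp_bits: int, man_bits: int):
    """Largest normal and smallest subnormal value under assumed IEEE-style rules."""
    bias = 2 ** (exp_bits - 1) - 1
    max_exp = (2 ** exp_bits - 2) - bias          # top exponent code is reserved
    max_normal = (2 - 2.0 ** -man_bits) * 2.0 ** max_exp
    min_subnormal = 2.0 ** (1 - bias - man_bits)
    return max_normal, min_subnormal

for name, e, m in [("E3M4", 3, 4), ("E4M3", 4, 3), ("E5M2", 5, 2)]:
    largest, smallest = ieee_style_range(e, m)
    # Relative spacing between neighbouring normal values is 2**-man_bits.
    print(f"{name}: max={largest:<8} min={smallest:<12.3e} rel. step={2.0 ** -m}")
```

Under these assumptions, each additional exponent bit widens the span between the smallest and largest magnitudes dramatically, while each mantissa bit given up doubles the relative spacing between neighbouring values.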

INT8, on the other hand, has no exponent field: its 8 bits encode a signed integer, effectively 1 sign bit and 7 value bits, so its representable values are evenly spaced. This sacrifices much of its dynamic range for precision. Whether or not this translates into better accuracy compared to FP8 depends on the AI model in question. And whether or not it translates into better power efficiency will depend on the underlying hardware. Research from Untether AI [6] shows that FP8 outperforms INT8 in terms of accuracy and, on their hardware, in performance and efficiency as well. By contrast, Qualcomm research [5] found that the accuracy gains of FP8 are not worth the loss of efficiency compared to INT8 on their hardware. Ultimately, the choice of data type when quantizing for inference will often come down to what is best supported in hardware, as well as the model itself.
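The difference in how the two types distribute their error can be seen by rounding a few values of very different magnitude to each format. This is a simplified sketch: the FP8 rounding assumes an IEEE-style E4M3 layout (bias 7, largest normal value 240), the INT8 path assumes symmetric per-tensor scaling, and the function names and test values are invented for the example. It says nothing about which format is more accurate on a real model.

```python
import math

def fp8_e4m3_round(x: float) -> float:
    """Round x to the nearest value on an assumed IEEE-style E4M3 grid."""
    exp_bits, man_bits = 4, 3
    bias = 2 ** (exp_bits - 1) - 1
    max_normal = (2 - 2.0 ** -man_bits) * 2.0 ** ((2 ** exp_bits - 2) - bias)
    if x == 0.0:
        return 0.0
    _, e = math.frexp(abs(x))        # abs(x) = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, 1 - bias)       # clamp small values to the subnormal exponent
    step = 2.0 ** (exp - man_bits)   # spacing between neighbours at this magnitude
    y = min(round(abs(x) / step) * step, max_normal)
    return math.copysign(y, x)

def int8_round(x: float, scale: float) -> float:
    """Quantize to a signed 8-bit integer step and map back to a real value."""
    q = max(-127, min(127, round(x / scale)))
    return q * scale

values = [0.01, 0.5, 90.0]           # illustrative values spanning a wide range
scale = max(abs(v) for v in values) / 127

for v in values:
    print(f"{v:>6}: FP8 -> {fp8_e4m3_round(v):<12} INT8 -> {int8_round(v, scale):.4f}")
```

With these inputs, INT8 reproduces the largest value almost exactly but rounds the smallest one to zero, while the FP8 grid keeps a roughly constant relative error across all three. Which behavior matters more depends on how a given model's weights and activations are distributed.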

References

[1] Compute Trends Across Three Eras Of Machine Learning, https://arxiv.org/pdf/2202.05924.pdf

[2] 8-bit Numerical Formats for Deep Neural Networks, https://arxiv.org/abs/2206.02915

[3] FP8 Formats for Deep Learning, https://arxiv.org/abs/2209.05433

[4] FP8 Quantization: The Power of the Exponent, https://arxiv.org/pdf/2208.09225.pdf

[5] FP8 versus INT8 for Efficient Deep Learning Inference, https://arxiv.org/abs/2303.17951

[6] FP8: Efficient AI Inference Using Custom 8-bit Floating Point Data Types, https://www.untether.ai/content-request-form-fp8-whitepaper

Waleed Atallah is a Product Manager responsible for silicon, boards, and systems at Untether AI. Currently, he is rolling out Untether AI’s second generation silicon product, the speedAI family of devices. He was previously a Product Manager at Intel, where he was responsible for high-end FPGAs with high bandwidth memory. His interests span all things compute efficiency, particularly the mapping of software to new hardware architectures. He received a B.S. degree in Electrical Engineering from UCLA.