Google Cloud Waives Cloud Exit Fees, Throws Down Gauntlet to AWS and Azure

In a bold move by the number three public cloud company yesterday, Google Cloud announced it will no longer charge customers extra to move their data out of its data centers, making it the first …

Nvidia Announces BlueField-3 GA, Oracle Cloud Is Early User

Nvidia today announced general availability for its BlueField-3 data processing unit (DPU) along with impressive early deployments including Oracle Cloud Infrastructure. First described in 2021 a …

Hyperion Paints a Positive Picture of the HPC Market

November 8, 2022

Return to normalcy is too strong, but the latest portrait of the HPC market presented by Hyperion Research yesterday is a positive one. Total 2022 HPC revenue (…

CEO Jack Hidary on SandboxAQ’s Ambitions and Near-term Milestones

October 18, 2022

Spun out from Google last March, SandboxAQ is a fascinating, well-funded start-up targeting the intersection of AI and quantum technology. “As the world enter…

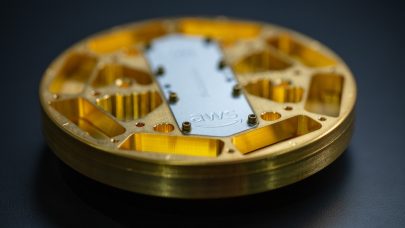

AWS Takes the Short and Long View of Quantum Computing

August 30, 2022

It is perhaps not surprising that the big cloud providers – a poor term really – have jumped into quantum computing. Amazon, Microsoft Azure, Google, and th…

Lenovo Launches Its TruScale HPC as a Service Offering

January 26, 2022

Lenovo today announced TruScale High Performance Computing as a Service (HPCaaS), which it says will offer a “cloud-like experience” to HPC organizations of all sizes. The new HPC-as-a-Service is part of the TruScale portfolio that Lenovo launched in February 2019 and expanded last September. The aim, said Lenovo, is to enable end users...

SC21: Larry Smarr on The Rise of Supernetwork Data Intensive Computing

November 26, 2021

Larry Smarr, founding director of Calit2 (now Distinguished Professor Emeritus at the University of California San Diego) and the first director of NCSA, is one of the seminal figures in the U.S. supercomputing community. What began as a personal drive, shared by others, to spur the creation of supercomputers in the U.S. for scientific use, later expanded into a...

Nvidia Debuts Quantum-2 Networking Platform with NDR InfiniBand and BlueField-3 DPU

November 10, 2021

Nvidia yesterday introduced Quantum-2, its new networking platform that features NDR InfiniBand (400 Gbps) and BlueField-3 DPU (data processing unit) capabilities. The name is perhaps confusing – it’s not a quantum computing device, even though Nvidia is getting into the true quantum computing market with its cuQuantum simulator. The name stems from the legacy line of Nvidia/Mellanox Quantum switches. That said, the new Quantum-2 platform specs are impressive. Jensen Huang, Nvidia CEO, introduced...

Hot Chips: Here Come the DPUs and IPUs from Arm, Nvidia and Intel

August 25, 2021

The emergence of data processing units (DPU) and infrastructure processing units (IPU) as potentially important pieces in cloud and datacenter architectures was…

Digging into the Atos-Nimbix Deal: Big US HPC and Global Cloud Aspirations. Look out HPE?

August 2, 2021

Behind Atos’s deal announced last week to acquire HPC-cloud specialist Nimbix are ramped-up plans to penetrate the U.S. HPC market and global expansion of its…

Whitepaper

From Hallucination to Reality

As Federal agencies navigate an increasingly complex and data-driven world, learning how to get the most out of high-performance computing (HPC), artificial intelligence (AI), and machine learning (ML) technologies is imperative to their mission. These technologies can significantly improve efficiency and effectiveness and drive innovation to serve citizens' needs better. Implementing HPC and AI solutions in government can bring challenges and pain points like fragmented datasets, computational hurdles when training ML models, and ethical implications of AI-driven decision-making. Still, CTG Federal, Dell Technologies, and NVIDIA unite to unlock new possibilities and seamlessly integrate HPC capabilities into existing enterprise architectures. This integration empowers organizations to glean actionable insights, improve decision-making, and gain a competitive edge across various domains, from supply chain optimization to financial modeling and beyond.

Sponsored by CTG Federal

Whitepaper

Why IT Must Have an Influential Role in Strategic Decisions About Sustainability

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, ultimately resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid-cooled (DLC) systems to improve cooling efficiency, thus lowering their PUE, operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.

Sponsored by Lenovo

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.