In April 2018, the U.S. Department of Energy announced plans to procure a trio of exascale supercomputers at a total cost of up to $1.8 billion. Over the ensuing four years, many announcements were made, many deadlines were missed, and a pandemic threw the world into disarray. Now, at long last, HPE and Oak Ridge National Laboratory (ORNL) have announced that the first of those three systems is operational: the HPE-built, AMD-powered Frontier supercomputer, a behemoth system that delivers 1.102 Linpack exaflops of computing power.

The computer

Frontier consists of 74 cabinets, each weighing in at 8,000 pounds. Spread across these cabinets are 9,408 HPE Cray EX nodes, each powered by one AMD “Trento” 7A53 Epyc CPU and four AMD Instinct MI250X GPUs (37,632 GPUs across the system). The system has 9.2 petabytes of memory, split evenly between HBM and DDR4, and uses HPE Slingshot 11 networking. It is supported by 37 petabytes of node-local storage on top of 716 petabytes of center-wide storage. The system is 100 percent liquid-cooled using warm (85°F) water, and the final Frontier system occupies 372 square meters of floor space.
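
For readers who want to check the headline hardware numbers, here is a minimal back-of-envelope sketch in Python. It only multiplies out figures stated above (9,408 nodes, four GPUs per node, 9.2 petabytes of memory split evenly between HBM and DDR4); the function names are illustrative and are not part of any ORNL or HPE tool.

```python
# Back-of-envelope check of Frontier's node, GPU and memory figures.
# Inputs are the figures quoted in the article; function names are illustrative.

NODES = 9_408            # HPE Cray EX nodes
GPUS_PER_NODE = 4        # AMD Instinct MI250X GPUs per node
TOTAL_MEMORY_PB = 9.2    # petabytes, split evenly between HBM and DDR4


def total_gpus(nodes: int, gpus_per_node: int) -> int:
    """Total GPU count across the system."""
    return nodes * gpus_per_node


def memory_split(total_pb: float) -> tuple[float, float]:
    """Return (HBM, DDR4) capacity in PB, assuming an even split."""
    half = total_pb / 2
    return half, half


if __name__ == "__main__":
    print(total_gpus(NODES, GPUS_PER_NODE))  # 37632, matching the article
    hbm, ddr4 = memory_split(TOTAL_MEMORY_PB)
    print(f"HBM: {hbm:.1f} PB, DDR4: {ddr4:.1f} PB")  # 4.6 PB each
```

The GPU total it prints agrees with the 37,632 figure ORNL reported.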

The 1.102 Linpack exaflops this hardware delivers easily earned it first place on the spring 2022 Top500 list (which was released today), and, in fact, the system is more powerful than the next seven Top500 systems combined. (For more on all of that, read HPCwire’s Top500 coverage.) Previously, Frontier had been characterized as a two-peak-exaflops system, but its first Top500 submission lists a theoretical peak of some 1.686 exaflops. (Oak Ridge said that there remains “much higher headroom on the GPUs and the CPUs” to achieve the two peak exaflops target.) Outside of Linpack and the Top500, the system benchmarks at 6.88 exaflops of mixed-precision performance on HPL-AI. The team ran out of time and was not able to submit an HPCG benchmark.
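
The gap between the measured Linpack result (Rmax, in Top500 terms) and the listed theoretical peak (Rpeak) can be expressed as a simple ratio. The sketch below is our own arithmetic on the two published figures, not a number reported by ORNL; it also shows how far the listed peak sits from the two-exaflops target.

```python
# Ratio of measured Linpack performance (Rmax) to listed theoretical peak (Rpeak).
# Inputs are the article's figures; the derived percentages are our own arithmetic.

RMAX_EF = 1.102         # measured HPL (Linpack) result, exaflops
RPEAK_EF = 1.686        # theoretical peak listed with the Top500 submission, exaflops
TARGET_PEAK_EF = 2.0    # the previously stated two peak exaflops target


def hpl_efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of theoretical peak sustained during the Linpack run."""
    return rmax / rpeak


if __name__ == "__main__":
    print(f"Rmax/Rpeak: {hpl_efficiency(RMAX_EF, RPEAK_EF):.1%}")          # 65.4%
    print(f"Listed peak vs. 2 EF target: {RPEAK_EF / TARGET_PEAK_EF:.1%}")  # 84.3%
```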

(There is, of course, an asterisk on Frontier’s debut as the first exascale system on the Top500. Last year, details emerged of two operational exascale supercomputers in China, the OceanLight and Tianhe-3 systems, neither of which is listed on the Top500.)

Unprecedented efficiency

Frontier also achieved another win out of the gate: second place on the spring 2022 Green500 list, which ranks supercomputers by their flops per watt. The Oak Ridge team accomplished this by delivering those 1.102 Linpack exaflops in a 21.1-megawatt power envelope, an efficiency of 52.23 gigaflops per watt (which works out to one exaflops at 19.15 megawatts). This puts the system within the 20-megawatt exascale power target set by DARPA in 2008, a target that had been viewed with much skepticism over the ensuing 14 years. Frontier was outpaced in efficiency only by its own one-cabinet test and development system (Frontier TDS, aka “Borg”), which delivered 62.68 gigaflops per watt.
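
The efficiency arithmetic in that paragraph is easy to reproduce. The short Python sketch below derives gigaflops per watt from the reported Linpack result and power draw, then the power needed for exactly one exaflops at that efficiency. The helper names are illustrative, and because the published inputs are rounded, the results match the Green500 figures only to the precision shown.

```python
# Reproduce the Green500 efficiency figures from the article's rounded inputs.
# Helper names are illustrative, not from any Green500 or ORNL tool.

LINPACK_FLOPS = 1.102e18   # 1.102 exaflops (Linpack Rmax)
POWER_WATTS = 21.1e6       # 21.1 megawatts during the run


def gflops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency in gigaflops per watt."""
    return flops / watts / 1e9


def megawatts_for(flops: float, gflops_per_w: float) -> float:
    """Power, in megawatts, needed to sustain `flops` at a given efficiency."""
    return flops / (gflops_per_w * 1e9) / 1e6


if __name__ == "__main__":
    eff = gflops_per_watt(LINPACK_FLOPS, POWER_WATTS)
    print(f"{eff:.2f} GF/W")                                   # 52.23
    print(f"{megawatts_for(1e18, eff):.2f} MW per exaflops")   # 19.15
    # The 20 MW exascale target implies at least 50 GF/W for one exaflops.
    print(f"{gflops_per_watt(1e18, 20e6):.1f} GF/W needed")    # 50.0
```

Framed this way, the DARPA goal is a floor of 50 gigaflops per watt, which Frontier clears by a modest margin.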

(This is not to say that Frontier is a low-energy system: ORNL is a direct-serve customer of the Tennessee Valley Authority, and in a press briefing, ORNL Director Thomas Zacharia said that “when you start the [Linpack] run, the machine, in less than ten seconds, begins to draw an additional 15 megawatts of power … that’s a small city in the U.S., that’s roughly about how much power the city of Oak Ridge consumes.”)

A challenging enterprise

Bringing a system like Frontier together over the course of the pandemic was no small feat. “We as a community, as a laboratory, as collaborators, we had to make a difficult decision,” Zacharia said. He explained that, before Covid vaccines were available, much of the Oak Ridge staff involved in Frontier continued to come to work to deliver the system, while limiting Covid spread as much as possible through measures like contact tracing. Further, he said, HPE dedicated 18 people to “scouring the world for parts small and large in order to build a system to deliver on scope, schedule and budget” during the pandemic and the ensuing supply chain disruptions. “I don’t think that we would have been able to deliver this machine but for the fact that [Cray] is now HPE,” Zacharia said, “because it required the global region, the size of HPE, in order to tackle the supply chain issues.”

“To go from where we were seven months ago: battling an unprecedented global supply chain shortage, battling a pandemic … and delivering a first-ever exascale system with a first-ever CPU, first-ever GPU and a new fabric is truly incredible,” added Justin Hotard, HPE’s EVP and GM for HPC and AI, in the press briefing. “To me, it’s the supercomputing equivalent of going to the moon.”

“Officially breaking the Linpack exascale barrier with a system comprised of new technologies and ahead of schedule is a testament to the thoughtful planning process and long-term vision of everyone associated with this machine,” commented Bob Sorensen, senior vice president of research for Hyperion Research. “I expect that just like the Roger Bannister four-minute mile, we will see this milestone number surpassed with some frequency in the next few years, but only one machine did it first. So the wait is over, we can all breathe a sigh of relief and move on to seeing the scientific and engineering advances enabled by Frontier as the system begins to address the important real-world workloads for which it was designed.”

Moonshots

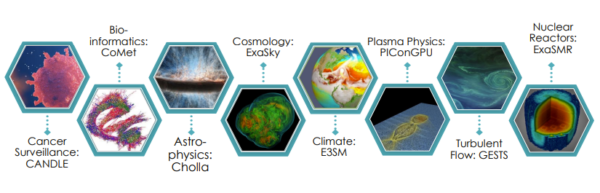

Indeed, both Hotard and Zacharia took care to emphasize the enormous impacts that Frontier stands to have across a range of scientific fields. (“This is really about the investments that we’re making in tackling science, right?” Zacharia said.) Over the course of the press briefing, they mentioned applications ranging from materials research for energy storage in electric vehicles to regional climate simulations with resolved clouds and rain to in-depth studies of long Covid. There will, of course, be countless others (some are enumerated in the accompanying graphic), and Zacharia said that research aimed at the Gordon Bell Prize will be running on the system by September of this year.

The Oak Ridge team is now beginning its “very rigorous acceptance testing process” for Frontier and says that it is on track for full operational availability by its previously set target date of January 1, 2023.

“It’s a proud moment, certainly, for Oak Ridge, for HPE, for AMD,” Zacharia said. “It’s a proud moment for our nation.”

The future

While we couldn’t pass up the Star Trek reference (nor could ORNL, which named Crusher, Frontier’s other test system at 1.5 cabinets, after Beverly and Wesley Crusher), questions remain as to whether this really is the final Frontier. Asked whether the system could be expanded in the future, Zacharia said: “It is the full expanse of the current machine. We certainly have more power and space available to us. We don’t know what the future holds.”

But for now, with Frontier delivered, eyes will turn to Argonne National Laboratory’s Aurora, the second of the three U.S. exascale systems. Aurora’s installation is now underway, and delivery of the Intel-based system (which is targeting “over two exaflops of peak performance”) is currently expected later in 2022. Aurora will be followed by Lawrence Livermore National Laboratory’s El Capitan system, also from HPE and AMD and expected in 2023.